A simpler sourcing maturity assessment approach

Knowing how to procure your IT services, software and hardware is a vital function in any organisation. Assessing one’s maturity in this aspect can be complex, which is why SURF developed a simpler approach.

There are a number of perspectives to take on IT and its place in an organisation, but for further and higher education institutions, the procurement or sourcing of services – in the widest sense of the word ‘services’ – may be among the most important ones. With the ongoing move to cloud provisioning, determining where a particular service is going to come from and how it is managed is crucial.

What could a GPS for learner journeys look like?

Last weekend, a motley crew of designers, students, developers, business and government people came together in Edinburgh to prototype designs and apps to help learners manage their journeys. With help, I built a prototype that showed how curriculum and course offering data can be combined with e-portfolios to help learners find their way.

The first official Scottish government data jam, facilitated by Snook and supported by TechCube, is part of a wider project to help people navigate the various education and employment options in life, particularly post 16. The jam was meant to provide a way to quickly prototype a wide range of ideas around the learner journey theme.

While many other teams at the jam built things like a prototype social network, or great visualisations to help guide learners through their options, we decided to use the data that was provided to help see what an infrastructure could look like that supported the apps the others were building.

In a nutshell, I wanted to see whether a mash-up of open data in open standard formats could help answer questions like:

- Where is the learner in their journey?

- Where can we suggest they go next?

- What can help them get there?

- Who can help or inspire them?

Here’s a slide deck that outlines the results. For those interested in the nuts and bolts, read on to learn more about how we got there.

Where is the learner?

To show how you can map where someone is on their learning journey, I made up an e-portfolio. Following an excellent suggestion by Lizzy Brotherstone of the Scottish Government, I nicked a story about ‘Ryan’ from an Education Scotland website on learner journeys. I recorded his journey in a Mahara e-portfolio, because it outputs data in the standard LEAP2a format; I could have used PebblePad as well for the same reason.

I then transformed the LEAP2a XML into very rough but usable RDF using a basic stylesheet I made earlier. Why RDF? Because it makes it easy for me to mash up the portfolios with other datasets; other data formats would also work. The made-up curriculum identifiers were added manually to the RDF, but could easily have been taken from the LEAP2a XML with a bit more time.
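As a rough illustration of that transformation step, here is a minimal Python sketch rather than the stylesheet I actually used. LEAP2a is built on Atom, so the sketch walks the entries of a feed and emits subject, predicate, object triples. The sample feed is a simplified, made-up stand-in for a real Mahara export, the `example.org` identifiers and the `EX_KIND` predicate are hypothetical, and the N-Triples writer does not escape quotes in literals.

```python
import xml.etree.ElementTree as ET

ATOM = "{http://www.w3.org/2005/Atom}"
DC_TITLE = "http://purl.org/dc/terms/title"
EX_KIND = "http://example.org/portfolio#kind"  # hypothetical predicate

# Simplified, made-up Atom feed standing in for a LEAP2a export.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <entry>
    <id>http://example.org/ryan/entry/1</id>
    <title>Music Technology</title>
    <category term="achievement"/>
  </entry>
  <entry>
    <id>http://example.org/ryan/entry/2</id>
    <title>Work in sound production</title>
    <category term="plan"/>
  </entry>
</feed>"""


def feed_to_triples(xml_text):
    """Turn each Atom entry into (subject, predicate, object) triples."""
    root = ET.fromstring(xml_text)
    triples = []
    for entry in root.findall(ATOM + "entry"):
        subject = entry.findtext(ATOM + "id")
        triples.append((subject, DC_TITLE, entry.findtext(ATOM + "title")))
        category = entry.find(ATOM + "category")
        if category is not None:
            triples.append((subject, EX_KIND, category.get("term")))
    return triples


def to_ntriples(triples):
    """Serialise triples as N-Triples lines with plain string literals."""
    return "\n".join(f'<{s}> <{p}> "{o}" .' for s, p, o in triples)


if __name__ == "__main__":
    print(to_ntriples(feed_to_triples(SAMPLE_FEED)))
```

Once the portfolio is in this shape, joining it with course or curriculum data comes down to sharing identifiers between the datasets.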

Where can we suggest they go next?

I expected that the Curriculum for Excellence would provide the basic structure to guide Ryan from his school qualifications to a college course. Not so, or at least, not entirely. The Scottish Credit and Qualifications Framework gives a good idea of how courses relate in terms of levels (i.e. from basic to a PhD and everything in between), but there’s little to join subjects. After a day of head scratching, I decided to match courses to Ryan’s qualifications by level and by comparing the text of titles. We ought to be able to do better than that!

The course data set that was provided to us was a mixture of course descriptions from the Scottish Qualifications Authority and actual running courses offered by Scottish colleges, all in one CSV file. During the jam, Devon Walshe of TechCube made a very comprehensive data set of all courses that you should check out, but too late for me. I had a brief look at using XCRI feeds like the ones from Adam Smith College too, but went with the original CSV in the end. I tried using LOD Refine to convert the CSV to RDF, but it got stuck on editing the RDF harness for some reason. Fortunately, the main OpenRefine version of the same tool worked its usual magic, and four made-up SQA URIs later, we were in business.

This query takes the email of Ryan as a unique identifier, then finds his qualification subjects and level. That’s compared to all courses from the data jam course data set, and whittled down to those courses that match Ryan’s qualifications and are above the level he already has.

The result: too many hits, including ones that are in subjects that he’s unlikely to be interested in.

So let’s throw in his interests as well. Result: two courses that are ideal for Ryan’s skills, but are a little above his level. So we find out all the sensible courses that can take him to his goal.
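The matching logic is simple enough to sketch in a few lines of Python. This is an illustration of the idea, not the query I ran against the triple store: the course titles, levels and interests below are made up, and `title_words` is a deliberately crude stand-in for "comparing the text of titles".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Qualification:
    title: str
    level: int


@dataclass(frozen=True)
class Course:
    title: str
    level: int


def title_words(text):
    """Lower-cased words of a title, ignoring punctuation and short words."""
    cleaned = (w.strip(".,:;()").lower() for w in text.split())
    return {w for w in cleaned if len(w) > 3}


def suggest_courses(qualifications, courses, interests=()):
    """Courses above the learner's level whose title overlaps a qualification.

    If interests are given, a course must also mention one of them.
    """
    interest_words = {i.lower() for i in interests}
    suggestions = []
    for course in courses:
        words = title_words(course.title)
        matches_qualification = any(
            course.level > q.level and words & title_words(q.title)
            for q in qualifications
        )
        matches_interest = not interest_words or bool(words & interest_words)
        if matches_qualification and matches_interest:
            suggestions.append(course)
    return suggestions


# Made-up sample data, for illustration only.
RYAN = [Qualification("Music Technology", 5), Qualification("Mathematics", 5)]
COURSES = [
    Course("Sound Production: Music Technology", 6),
    Course("Applied Mathematics", 7),
    Course("Music Technology", 5),
    Course("Hairdressing", 6),
]

if __name__ == "__main__":
    print(suggest_courses(RYAN, COURSES))                       # level and title only
    print(suggest_courses(RYAN, COURSES, interests=["sound"]))  # plus interests
```

Without interests, every higher-level course that shares a title word comes back; adding interests narrows the list, which is the same effect as in the two queries described above.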

What can help them get there?

One other quirk about the Curriculum for Excellence appears to be that there are subject taxonomies, but they differ per level. Intralect implemented a very nice one that can be used to tag resources up to level 3 (we think). So Intralect’s Janek exported the vocabulary in two CSV files, which I imported into my triple store. He then built a little web service in a few hours that takes the outcome of this query, and returns a list of all relevant resources in the Intralibrary digital repository for stuff that Ryan has already learned, but may want to revisit.

Who can help or inspire them?

It’s always easier to have someone along for the journey, or to ask someone who’s been before you. That’s why I made a second e-portfolio for Paula. Paula is a year older than Ryan, is from a different, but nearby school, and has done the same qualifications. She’s picked the same qualification as a goal that we suggested to Ryan, and has entered it as a goal on her e-portfolio. Ryan can get in touch with her over email.

This query takes the course suggested to Ryan, matches it to someone else’s stated academic goal, and reports on what she’s done, what school she’s from, and her contact details.
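In outline, and again as a sketch with made-up data rather than the actual query, the peer match looks something like this in Python. The portfolio structure, the names of the schools and the `example.org` addresses are all hypothetical.

```python
# Made-up portfolios, for illustration only.
PORTFOLIOS = [
    {
        "name": "Ryan",
        "email": "ryan@example.org",
        "school": "School A",
        "achievements": ["Music Technology", "Mathematics"],
        "goals": [],
    },
    {
        "name": "Paula",
        "email": "paula@example.org",
        "school": "School B",
        "achievements": ["Music Technology", "Mathematics"],
        "goals": ["Sound Production: Music Technology"],
    },
]


def find_peers(suggested_course, portfolios, learner_email):
    """Other learners who list the suggested course as a goal."""
    peers = []
    for portfolio in portfolios:
        if portfolio["email"] == learner_email:
            continue  # don't suggest the learner to themselves
        if suggested_course in portfolio["goals"]:
            peers.append(
                {
                    "name": portfolio["name"],
                    "school": portfolio["school"],
                    "achievements": portfolio["achievements"],
                    "email": portfolio["email"],
                }
            )
    return peers


if __name__ == "__main__":
    for peer in find_peers(
        "Sound Production: Music Technology", PORTFOLIOS, "ryan@example.org"
    ):
        print(peer)
```

The match here is on an exact course title; with agreed course identifiers in both the portfolios and the course data, it would be on those identifiers instead.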

Conclusion

For those parts of the Curriculum for Excellence for which experiences and outcomes have been defined, it’d be very easy to be very precise about progression, future options, and what resources would be particularly helpful for a particular learner at a particular part of the journey. For the crucial post 16 years, this is not really possible in the same way right now, though it’s arguable that it’s all the more important to have solid guidance at that stage.

Some judicious information architecture would make a lot more possible without necessarily changing the syllabus across the board. Just a model that connects subject areas across the levels, and school and college tracks would make more robust learner journey guidance possible. Statements that clarify which course is an absolute pre-requisite for another, and which are suggested as likely or preferable would make it better still.

We have the beginnings of a map for learner journeys, but we’re not there yet.

Other than that, I think agreed identifiers and data formats for curriculum parts, electronic portfolios or transcripts and course offerings can enable a whole range of powerful apps of the type that others at the data jam built, and more. Thanks to standards, we can do that without having to rely on a single source of truth or a massive system that is a single point of failure.

Find out all about the other great hacks on the learner journey data jam website.

All the data and bits of code I used are available on GitHub.

eTextBooks Europe

I went to a meeting for stakeholders interested in the eTernity (European textbook reusability networking and interoperability) initiative. The hope is that eTernity will be a project of the CEN Workshop on Learning Technologies with the objective of gathering requirements and proposing a framework to provide European input to ongoing work by ISO/IEC JTC 1/SC36, WG6 & WG4 on eTextBooks (which is currently based around Chinese and Korean specifications). Incidentally, as part of the ISO work there is a questionnaire asking for information that will be used to help decide what that standard should include. I would encourage anyone interested to fill it in.

The stakeholders present represented many perspectives from throughout Europe: publishers, publishing industry specification bodies (e.g. IDPF who own EPUB3, and DAISY), national bodies with some sort of remit for educational technology, and elearning specification and standardisation organisations. I gave a short presentation on the OER perspective.

Many issues were raised through the course of the day, including (in no particular order)

- Interactive and multimedia content in eTextbooks

- Accessibility of eTextbooks

- eTextbooks shouldn’t be monolithic and immutable chunks of content; it should be possible to link directly to specific locations or to disaggregate the content

- The lifecycle of an eTextbook. This goes beyond initial authoring and publishing

- Quality assurance (of content and pedagogic approach)

- Alignment with specific curricula

- Personalization and adaptation to individual needs and requirements

- The ability to describe the learning pathway embodied in an eTextbook, and vary either the content used on this pathway or to provide different pathways through the same content

- The ability to describe a range of IPR and licensing arrangements of the whole and of specific components of the eTextbook

- The ability to interact with learning systems with data flowing in both directions

If you’re thinking that sounds like a list of the educational technology issues that we have been busy with for the last decade or two, then I would agree with you. Furthermore, there is a decade or two’s worth of educational technology specs and standards that address these issues. Of course not all of those specs and standards are necessarily the right ones for now, and there are others that have more traction within digital publishing. EPUB3 was well represented in the meeting (DITA is the other publishing standard mentioned in the eTernity documentation, but no one was at the meeting to talk about that) and it doesn’t seem impossible to meet the educational requirements outlined in the meeting within the general EPUB3 framework. The question is which issues should be prioritised and how should they be addressed.

Of course a technical standard is only an enabler: it doesn’t in itself make any change to teaching and learning; change will only happen if developers create tools and authors create resources that exploit the standard. For various reasons that hasn’t happened with some of the existing specs and standards. A technical standard can facilitate change but there needs to be a will or a necessity to change in the first place. One thing that made me hopeful about this was a point made by Owen White of Pearson that he did not think of the business he is in as being centred around content creation and publishing but around education and learning, and that leads away from the view of eBooks as isolated static aggregations.

For more information keep an eye on the eTernity website

VLE commodification is complete as Blackboard starts supporting Moodle and Sakai

Unthinkable a couple of years ago, and it still feels a bit April 1st: Blackboard has taken over the Moodlerooms and NetSpot Moodle support companies in the US and Australia. Arguably as important is that they have also taken on Sakai and IMS luminary Charles Severance to head up Sakai development within Blackboard’s new Open Source Services department. The life of the Angel VLE, which Blackboard acquired a while ago, has also been extended.

For those of us who saw Blackboard’s aggressive acquisition of commercial competitors WebCT and Angel, and saw the patent litigation they unleashed against Desire2Learn, the idea of Blackboard pledging to be a good open source citizen may seem a bit … unsettling, if not 1984ish.

But it has been clear for a while that Blackboard’s old strategy of ‘owning the market’ just wasn’t going to work. Whatever the unique features are that Blackboard has over Moodle and Sakai, they aren’t enough to convince every institution to pay for the license. Choosing between VLEs was largely about price and service, not functionality. Even in institutions where price and service were not an issue, many departments had their own, sometimes not entirely functional, reasons for sticking with one or another VLE that wasn’t Blackboard.

In other words, the VLE had become a commodity. Everyone needs one, and they are fairly predictable in their functionality, and there is not that much between them, much as I’ve outlined in the past.

So it seems Blackboard have wisely decided to switch focus from charging for IP to becoming a provider of learning tool services. As Blackboard’s George Kroner noted, “It does kinda feel like @Blackboard is becoming a services company a la IBM under Gerstner”

And just as IBM has become quite a champion of Open Source Software, there is no reason to believe that Blackboard will be any different. Even if only because the projects will not go away, whatever they do to the support companies they have just taken over. Besides, ‘open’ matters to the education sector.

Interoperability

Blackboard had already abandoned extreme lock-in by investing quite a bit in open interoperability standards, mostly through the IMS specifications. That is, users of the latest versions of Blackboard can get their data, content and external tool connections out more easily than in the past, so lock-in is no longer as much of a reason to stick with them.

Providing services across the vast majority of VLEs (outside of continental Europe at least) means that Blackboard has even more of an incentive to make interoperability work across them all. Dr Chuck Severance’s appointment also strongly hints at that.

This might need a bit of watching. Even though the very different codebases, and a vested interest in openness, mean that Blackboard-sponsored interoperability solutions – whether arrived at through IMS or not – are likely to be applicable to other tools, this is not guaranteed. There might be a temptation to cut corners to make things work quickly between just Blackboard Learn, Angel, Moodle 1.9/2.x and Sakai 2.x.

On the other hand, the more pressing interoperability problems are not so much between the commodified VLEs anymore, they are between VLEs and external learning tools and administrative systems. And making that work may just have become much easier.

The Blackboard press releases on Blackboard’s website.

Dr Chuck Severance’s post on his new role.

“Open Higher Education”, a scenario from the TEL-Map UK HE cluster

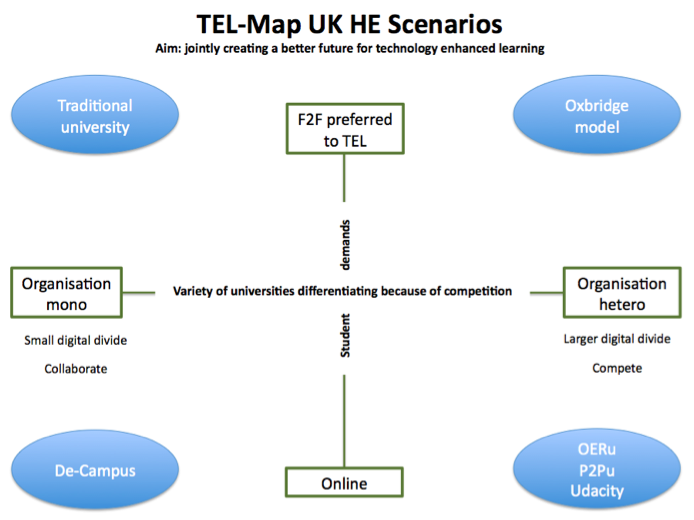

At the “Emerging Reality: Making sense of new models of learning organisation” workshop at the CETIS conference 2012, Bill Olivier from the Institute of Educational Cybernetics at the University of Bolton presented a scenario of “Open Higher Education” which was developed by a group of participants from the UK HE sector. Having been involved in the UK OER programme, looking at the trends and development around OERs and open education in HE, it was really interesting to see this scenario emerging as one of the outcomes of the meeting of a UK HE cluster through the modified Future Search Method adopted by the TEL-Map project.

During a meeting at Nottingham, prior to the CETIS conference, the TEL-Map UK HE cluster identified some 80 trends and drivers impacting on the future of TEL in UK higher education. The group rated them for Impact/Importance and consolidated the high impact, high uncertainty trends and drivers into two overarching but mutually independent axes: ‘Variety of universities’ and ‘Student demands’. In the cross-impact analysis, these two axes were placed against each other to develop four context scenarios, namely Oxbridge Model, Traditional University Model, De-Campus Model and Open-Ed Model.

The Open Higher Education scenario was identified from the bottom right quadrant of the scenario diagram above. In this scenario, emerging leaders include the OERu, P2Pu and Udacity. The common features of this scenario’s learning model include low cost content and peer learning support, with expert support when it is needed. Initially, students choose this form of HE because they don’t have to pay for the services provided by universities that they cannot benefit from, such as sports halls, student societies, classrooms and libraries, etc. This model expands as more and more people find online university courses affordable & practical and more students see its benefits, including those who would have attended traditional university.

Along with the Open Higher Education scenario, three other thought-provoking and interesting scenarios were presented and discussed, including Christian Voigt’s “Technology Supported Learning Design”; Adam Cooper’s “The Network of Society of Scholars” and New Models of Learning.

ideascale

TEL-Map project website

Approaches to building interoperability and their pros and cons

System A needs to talk to System B. Standards are the ideal to achieve that, but pragmatics often dictate otherwise. Let’s have a look at what approaches there are, and their pros and cons.

When I looked at the general area of interoperability a while ago, I observed that useful technology becomes ubiquitous and predictable enough over time for the interoperability problem to go away. The route to get to such commodification is largely down to which party – vendors, customers, domain representatives – is most powerful and what their interests are. Which describes the process very nicely, but doesn’t help solve the problem of connecting stuff now.

So I thought I’d try to list what the choices are, and what their main pros and cons are:

A priori, global

Also known as de jure standardisation. Experts, user representatives and possibly vendor representatives get together to codify all or part of a service interface between systems that are emerging or don’t exist yet; it can concern the syntax, semantics or transport of data. Intended to facilitate the building of innovative systems.

Pros:

- Has the potential to save a lot of money and time in systems development

- Facilitates easy, cheap integration

- Facilitates structured management of network over time

Cons:

- Viability depends on the business model of all relevant vendors

- Fairly unlikely to fit either actually available data or integration needs very well

A priori, local

I.e. some type of Service Oriented Architecture (SOA). Local experts design an architecture that codifies syntax, semantics and operations into services. Usually built into agents that connect to each other via an enterprise service bus (ESB).

Pros:

- Can be tuned for locally available data and to meet local needs

- Facilitates structured management of network over time

- Speeds up changes in the network (relative to ad hoc, local)

Cons:

- Requires major and continuous governance effort

- Requires upfront investment

- Integration of a new system still takes time and effort

Ad hoc, local

Custom integration of whatever is on an institution’s network by the institution’s experts in order to solve a pressing problem. Usually built on top of existing systems using whichever technology is to hand.

Pros:

- Solves the problem of the problem owner fastest in the here and now.

- Results accurately reflect the data that is actually there, and the solutions that are really needed

Cons:

- Non-transferable beyond local network

- Needs to be redone every time something changes on the local network (considerable friction and cost for new integrations)

- Can create hard to manage complexity

Ad hoc, global

Custom integration between two separate systems, done by one or both vendors. Usually built as a separate feature or piece of software on top of an existing system.

Pros:

- Fast point-to-point integration

- Reasonable to expect upgrades for future changes

Cons:

- Depends on business relations between vendors

- Increases vendor lock-in

- Can create hard to manage complexity locally

- May not meet all needs, particularly cross-system BI

Post hoc, global

Also known as standardisation, consortium style. Service provider and consumer vendors get together to codify a whole service interface between existing systems; syntax, semantics, transport. The resulting specs usually get built into systems.

Pros:

- Facilitates easy, cheap integration

- Facilitates structured management of network over time

Cons:

- Takes a long time to start, and is slow to adapt

- Depends on business model of all relevant vendors

- Liable to fit either available data or integration needs poorly

Clearly, no approach offers instant nirvana, but it does make me wonder whether there are ways of combining approaches such that we can connect short term gain with long term goals. I suspect if we could close-couple what we learn from ad hoc, local integration solutions to the design of post-hoc, global solutions, we could improve both approaches.
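One way to keep the comparison honest is to write the list down as data. The Python sketch below encodes only what is in the list above: each approach is a combination of timing and scope, and the `depends_on_vendors` flag is my own reading of whether the cons for that approach mention vendors. The class and function names are made up for the illustration.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class Timing(Enum):
    A_PRIORI = "a priori"
    AD_HOC = "ad hoc"
    POST_HOC = "post hoc"


class Scope(Enum):
    LOCAL = "local"
    GLOBAL = "global"


@dataclass(frozen=True)
class Approach:
    timing: Timing
    scope: Scope
    depends_on_vendors: bool  # my reading of the cons listed for each approach


APPROACHES = [
    Approach(Timing.A_PRIORI, Scope.GLOBAL, depends_on_vendors=True),
    Approach(Timing.A_PRIORI, Scope.LOCAL, depends_on_vendors=False),
    Approach(Timing.AD_HOC, Scope.LOCAL, depends_on_vendors=False),
    Approach(Timing.AD_HOC, Scope.GLOBAL, depends_on_vendors=True),
    Approach(Timing.POST_HOC, Scope.GLOBAL, depends_on_vendors=True),
]


def unlisted_combinations(approaches):
    """Timing/scope combinations that the list does not cover."""
    listed = {(a.timing, a.scope) for a in approaches}
    return [cell for cell in product(Timing, Scope) if cell not in listed]


def vendor_dependent_scopes(approaches):
    """Scopes for which at least one approach depends on vendors."""
    return {a.scope for a in approaches if a.depends_on_vendors}


if __name__ == "__main__":
    print(unlisted_combinations(APPROACHES))
    print(vendor_dependent_scopes(APPROACHES))
```

Laid out like this, two things stand out: every global approach depends on vendors in some way, and the one combination not on the list is post hoc, local.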

Let me know if I missed anything!

Business Adopts Archi Modelling Tool

Many technologies and tools in use in universities and colleges are not developed for educational settings. In the classroom in particular, teachers have become skilled at applying new technologies such as Twitter to educational tasks. But technology also plays a crucial role behind the scenes in any educational organisation in supporting and managing learning, and like classroom tools these technologies are not always developed with education in mind. So it is refreshing to find an example of an application developed for UK Higher and Further education being adopted by the commercial sector.

Archi is an open source ArchiMate modelling tool developed as part of JISC’s Flexible Service Delivery programme to help educational institutions take their first steps in enterprise architecture modelling. ArchiMate is a modelling language hosted by the Open Group who describe it as “a common language for describing the construction and operation of business processes, organizational structures, information flows, IT systems, and technical infrastructure”. Archi enforces all the rules of ArchiMate so that the only relationships that can be established are those allowed by the language.

Since the release of version 1.0 in June 2010 Archi has built up a large user base and now gets in excess of 1000 downloads per month. Of course, universities and colleges are not the only organisations that need a better understanding of their internal business processes. We spoke to Phil Beauvoir, Archi developer at JISC CETIS, about the tool and why it has a growing number of users in the commercial world.

Christina Smart (CS): Can you start by giving us a bit of background about Archi and why it was developed?

Phil Beauvoir (PB): In summer of 2009 Adam Cooper asked whether I was interested in developing an ArchiMate modelling tool. Some of the original JISC Flexible Service Delivery projects had started to look at their institutional enterprise architectures, and wanted to start modelling. Some projects had invested in proprietary tools, such as BiZZdesign’s Architect, and it was felt that it would be a good idea to provide an open source alternative. Alex Hawker (the FSD Programme manager) decided to invest six months of funding to develop a proof of concept tool to model using the ArchiMate language. The tool would be aimed at the beginner, be open source, cross-platform and would have limited functionality. I started development on Archi in earnest in January 2010 and by April had the first alpha version 0.7 ready. Version 1.0 was released in June 2010, and it grew from there.

CS: How would you describe Archi?

PB: The web site describes Archi as: “A free, open source, cross platform, desktop application that allows you to create and draw models using the ArchiMate language”. Users who can’t afford proprietary software would use standard drawing tools such as Omnigraffle or Visio for modelling. Archi is positioned somewhere between those drawing tools and a tool like BiZZdesign’s Architect. It doesn’t have all the functionality and enterprise features of the BiZZdesign tool, but it has more than just plain drawing tools. Archi also has hints, help and user assistance technology built into it, so when you’re drawing elements there are certain ArchiMate rules about which connections you can make; if you try to make a connection that’s not allowed you get an explanation why not. So for the beginner it is a great way to start understanding ArchiMate. We keep the explanations simple because we aim to make things easier for those users who are beginners in ArchiMate. As the main developer I try to keep Archi simple, because there’s always a danger that you can keep adding on features and that would make it unusable. I try to steer a course between usability and features.

Archi screenshot

Another aspect of Archi is the way it supports the modelling conversation. Modelling is not done in isolation; it’s about capturing a conversation between key stakeholders in an organisation. Archi allows you to sketch a model and take notes in a Sketch View before you add the ArchiMate enterprise modelling rules. A lot of people use the Sketch View. It enables a capture of a conversation, the “soft modelling” stage before undertaking “hard modelling”.

CS: How many people are using it within the Flexible Service Delivery programme?

PB: I’m not sure, I know the King’s College, Staffordshire and Liverpool John Moores projects were using it. Some of the FSD projects tended to use both Architect and Archi. If they already had one licence for BiZZdesign Architect they would carry on using it for their main architect, whereas other “satellite” users in the institution would use Archi.

CS: Archi has a growing number of users outside education, who are they and how did they discover Archi?

PB: Well the first version was released in June 2010, and people in the FSD programme were using it. Then in July 2010 I got an email from a large Fortune 500 insurance company in the US, saying they really liked the tool and would consider sponsoring Archi if we implemented a new feature. I implemented the feature anyway and we’ve built up the relationship with them since then. I know that this company has in the region of 100 enterprise architects and they’ve rolled Archi out as their standard enterprise architecture modelling tool.

I am also aware of other commercial companies using it, but how did they discover it? Well I think it’s been viral. A lot of businesses spend a lot of money advertising and pushing products, but the alternate strategy is pull, when customers come to you. Archi is of the pull variety, because there is a need out there, we haven’t had to do very much marketing, people seem to have found Archi on their own. Also the TOGAF (The Open Group Architecture Framework) developed by the Open Group is becoming very popular and I guess Archi is useful for people adopting TOGAF.

In 2010 BiZZdesign were I think concerned about Archi being a competitor in the modelling tool space. However now they’re even considering offering training days on Archi, because Archi has become the de facto free enterprise modelling tool. Archi will never be a competitor to BiZZdesign’s Architect, they have lots of developers and there’s only me working on Archi, it would be nuts to try to compete. So we will focus on the aspects of Archi that make it unique, the learning aspects, the focus on beginners and the ease of use, and clearly forge out a path between the two sets of tools.

Many people will start with Archi and then upgrade to BiZZdesign’s Architect, so we’re working on that upgrade path now.

CS: Why do you think it is so popular with business users?

PB: I’m end-user driven, for me Archi is about the experience of the end users, ensuring that the experience is first class and that it “just works”. It’s popular with business users firstly because it’s free, secondly because it works on all platforms, thirdly because it’s aimed at those making their first steps with ArchiMate.

CS: What is the immediate future for Archi?

PB: We’re seeking sponsorship deals and other models of sustainability because obviously JISC can’t go on supporting it forever. One of the models of sustainability is to get Archi adopted by something like the Eclipse Foundation. But you have to be careful that development continues in those foundations, because there is a risk of it becoming a software graveyard, if you don’t have the committers who are prepared to give their time. There is a vendor who has expressed an interest in collaborating with us to make sure that Archi has a future.

Lots of software companies now have service business models, so you provide the tool for free but charge for providing services on top of the free tool. The Archi tool will always be free, anyone could package it up and sell it. I know they’re doing that in China because I’ve had emails from people doing it, they’ve translated it and are selling it and that’s ok because that’s what the licence model allows.

In terms of development we’re adding on some new functionality. A new concept of a Business Model Canvas is becoming popular, where you sketch out your new business models. The canvas is essentially a nine box grid which you add various key partners, stakeholders etc to. We’re adding a canvas construction kit to Archi, so people can design their own canvas for new business models. The canvas construction kit is aimed at the high level discussions that people have when they start modelling their organisations.

CS: You’ve developed a number of successful applications for the education sector over the years, including, Colloquia, Reload and ReCourse, how do you feel the long term future for Archi compares with those?

PB: Colloquia was the first tool I developed back in 1998, and I don’t really think it’s used anymore. But really Colloquia was more a proof of concept to demonstrate that you could create a learning environment around the conversational model, which supported learning in a different way from the VLEs that were emerging at the time. Its longevity has been as a forerunner to social networking and to the concept of the Personal Learning Environment.

Reload was a set of tools for doing content packaging and SCORM. They’re not meant for teachers, but they’re still being used.

The ReCourse Learning Design tool was developed for a very niche audience of those people developing scripted learning designs.

I think the long term future for Archi is better than those, partly because there’s a very large active community using it, and partly because it can be used by all enterprises and isn’t just a specific tool for the education sector. I think Archi has an exciting future.

User feedback

Phil has received some very positive feedback about Archi via email from JISC projects as well as those working in the commercial world.

JISC projects

“The feeling I get from Archi is that it’s helping me to create shapes, link and position them rather than jumping around dictating how I can work with it. And the models look much nicer too… I think Archi will allow people to investigate EA modelling cost free to see whether it works for them, something that’s not possible at the moment.”

“So why is Archi significant? It is an open source tool funded by JISC based on the ArchiMate language that achieves enough of the potential of a tool like BiZZdesign Architect to make it a good choice for relatively small enterprises, like the University of Bolton to develop their modelling capacity without a significant software outlay.” [15] Stephen Powell from the Co-educate project (JISC Curriculum Design Programme).

Commercial

“I’m new to EA world, but Archi 1.1 makes me fill like at home! So easy to use and so exciting…”

“Version 1.3 looks great! We are rolling Archi out to all our architects next week. The ones who have tried it so far all love it.”

Find Out More

If this interview has whetted your appetite, more information about Archi, and the newly released version 2.0 is available at http://archi.cetis.org.uk. For those in the north, there will be an opportunity to see Archi demonstrated at the forthcoming 2nd ArchiMate Modelling Bash being held in St Andrews on the 1st and 2nd November.

ArchiMate modelling bash outcomes

What’s more effective than taking two days out to focus on a new practice with peers and experts?

Following the JISC’s FSD programme, an increasing number of UK Universities started to use the ArchiMate Enterprise Architecture modelling language. Some people have had some introductions to the language and its uses, others even formal training in it, others still visited colleagues who were slightly further down the road. But there was a desire to take the practice further for everyone.

For that reason, Nathalie Czechowski of Coventry University took the initiative to invite anyone with an interest in ArchiMate modelling (not just UK HE), to come to Coventry for a concentrated two days together. The aims were:

1) Some agreed modelling principles

2) Some idea whether we’ll continue with an ArchiMate modeller group and have future events, and in what form

3) The models themselves

With regard to 1), work is now underway to codify some principles in a document, a metamodel and an example architecture. These principles are based on the existing Coventry University standards and the Twente University metamodel, and the primary aim of them is to facilitate good practice by enabling sharing of, and comparability between, models from different institutions.

With regard to 2), the feeling of the bash participants was that it was well worth sustaining the initiative and organising another bash in about six months’ time. The means of staying in touch in the meantime have yet to be established, but one will be found.

As to 3), a total of 15 models were made or tweaked and shared over the two days. Varying from some state of the art, generally applicable samples to rapidly developed models of real life processes in universities, they demonstrate the diversity of the participants and their concerns.

All models and the emerging community guidelines are available on the FSD PBS wiki.

Jan Casteels also blogged about the event on Enterprise Architect @ Work