Yes, you can now download it from our main website!

Author Archives: feedforward

Hey, where’s the server gone?

Just about the worst thing that can happen in the sprint towards a major release milestone: you can't see the server all your precious code is sat on. Has it been switched off? Has it crashed? Has the firewall blocked it? Is our code still OK?

Hopefully it’ll be up again in the morning.

Simplicity is hard

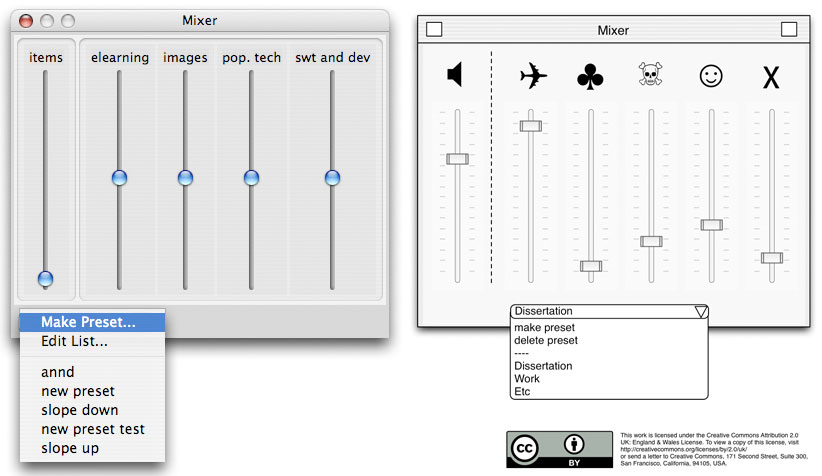

From wireframe to code: The Mixer component

Modal Mappings

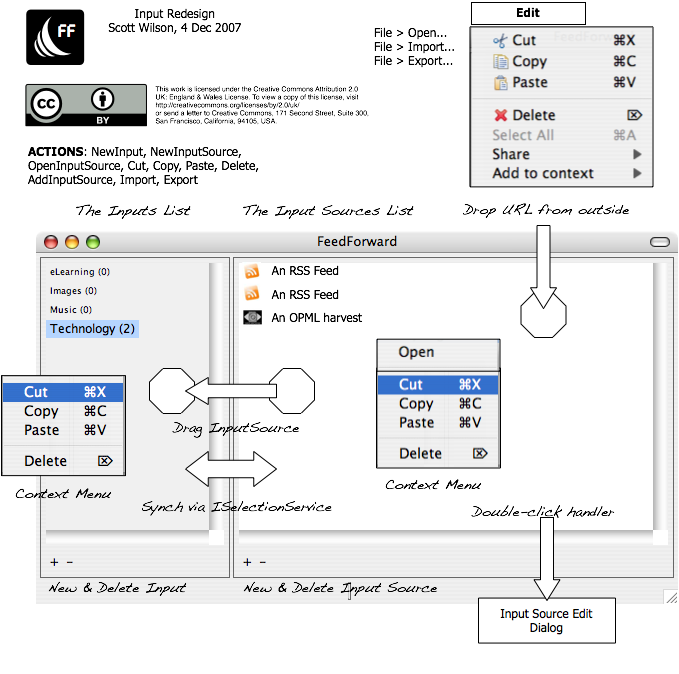

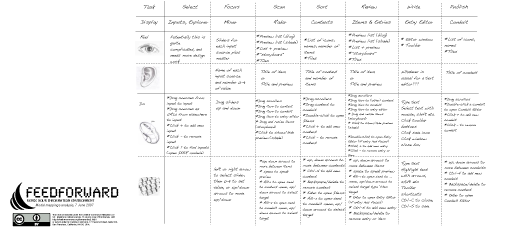

As part of the design phase I produced a map of the modes of interaction in the various application components. Again, this is inspired by Bill Verplank's approach to design.

This map takes four modes into account:

1. Feel: split into seeing and hearing

2. Do: split into mouse and keyboard

See it here:

One thing the map does is help develop a feel for the different modes of access.

Actually implementing these modes is very difficult in today's applications, as things like keyboard access and text-to-speech are platform-specific. So the full keyboard and speech experience will have to wait until M2 at the earliest.

Getting closer

I've been doing some basic tidying up: adding missing icons, sorting out various kinds of drag and drop, and so on. Some bits still look unfinished; hopefully we'll get time to work on that for M2. Meanwhile, I have to get to work on sorting out the Mixer.

Here’s what I have right now in my test build:

Another a-ha moment – FF as SWORD droplet

Milestone 1 Due Next Week

Don’t panic!!!

(For progress so far, see the roadmap.)

SWORD

I was going to title this post with some sort of pithy remark like “live by the SWORD”, “Fallen on my own SWORD” etc., but thankfully resisted the urge.

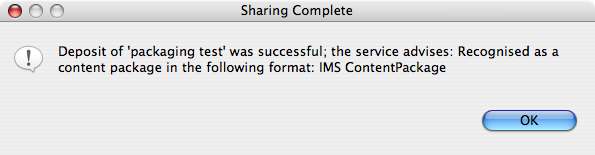

I've got the SWORD protocol basically worked out; this was planned for M2 next April, but as there was a JISC programme meeting I worked on it early. As it was, a family-wide cold/'flu/lurgy meant I couldn't go anyway. Oh well.

Still, FeedForward does now have some basic SWORD functionality, and can deposit a context (user collection) into an academic repository such as IntraLibrary (learning objects) or ePrints (papers). This does require a bit of faffing about figuring out how to render the context in some meaningful way; for IntraLibrary I just build an IMS Content Package from the Context's contents. But what would I do, realistically, with what is basically a list of references and notes for deposit in ePrints? A skeletal paper outline?
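For anyone curious what "package the context and deposit it" might involve, here is a minimal Python sketch; it is not FeedForward's actual code (which isn't shown here). It assumes a context's contents can be reduced to a list of `(title, html_body)` pairs, writes one HTML file per item plus an `imsmanifest.xml` into a zip, and then prepares a SWORD 1.3-style POST carrying that zip. The function names, identifiers, deposit URL and the packaging URI sent in `X-Packaging` are all hypothetical; check what the target repository's service document actually advertises, and note that authentication is left out entirely.

```python
import html
import io
import urllib.request
import zipfile
import xml.etree.ElementTree as ET

# IMS Content Packaging 1.1 namespace; also used (as an assumption) as the SWORD packaging URI.
IMSCP_NS = "http://www.imsglobal.org/xsd/imscp_v1p1"


def build_content_package(context_title, items):
    """Return zip bytes containing imsmanifest.xml plus one HTML file per item.

    items: list of (title, html_body) pairs, a hypothetical stand-in for a Context's contents.
    """
    # Setting xmlns as a plain attribute keeps element names short; parsers still see the namespace.
    manifest = ET.Element("manifest", {"xmlns": IMSCP_NS, "identifier": "MANIFEST-1"})
    orgs = ET.SubElement(manifest, "organizations", {"default": "ORG-1"})
    org = ET.SubElement(orgs, "organization", {"identifier": "ORG-1"})
    ET.SubElement(org, "title").text = context_title
    resources = ET.SubElement(manifest, "resources")

    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
        for i, (title, body) in enumerate(items, start=1):
            href = f"item{i}.html"
            # Organization entry: what the user sees as the package's table of contents.
            item = ET.SubElement(org, "item", {"identifier": f"ITEM-{i}", "identifierref": f"RES-{i}"})
            ET.SubElement(item, "title").text = title
            # Resource entry: points at the actual file stored in the zip.
            res = ET.SubElement(resources, "resource",
                                {"identifier": f"RES-{i}", "type": "webcontent", "href": href})
            ET.SubElement(res, "file", {"href": href})
            page = f"<html><body><h1>{html.escape(title)}</h1>{body}</body></html>"
            zf.writestr(href, page)
        manifest_xml = ET.tostring(manifest, encoding="utf-8", xml_declaration=True)
        zf.writestr("imsmanifest.xml", manifest_xml)
    return buf.getvalue()


def build_sword_request(deposit_url, package, filename="context.zip"):
    """Prepare (but do not send) a SWORD 1.3-style deposit POST for a zipped package."""
    req = urllib.request.Request(deposit_url, data=package, method="POST")
    req.add_header("Content-Type", "application/zip")
    req.add_header("Content-Disposition", f"filename={filename}")
    req.add_header("X-Packaging", IMSCP_NS)  # assumed packaging identifier
    req.add_header("X-No-Op", "true")  # ask the server to validate without storing anything
    return req
```

Sending the request would then be a matter of `urllib.request.urlopen(req)` plus whatever credentials the repository requires. Keeping packaging and deposit as separate steps mirrors the problem described above: the SWORD request is identical for every repository, and only the "render the context" step changes (an IMS package for IntraLibrary, something else again for ePrints).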

No FF blog post is allowed without at least one image, so here it is:

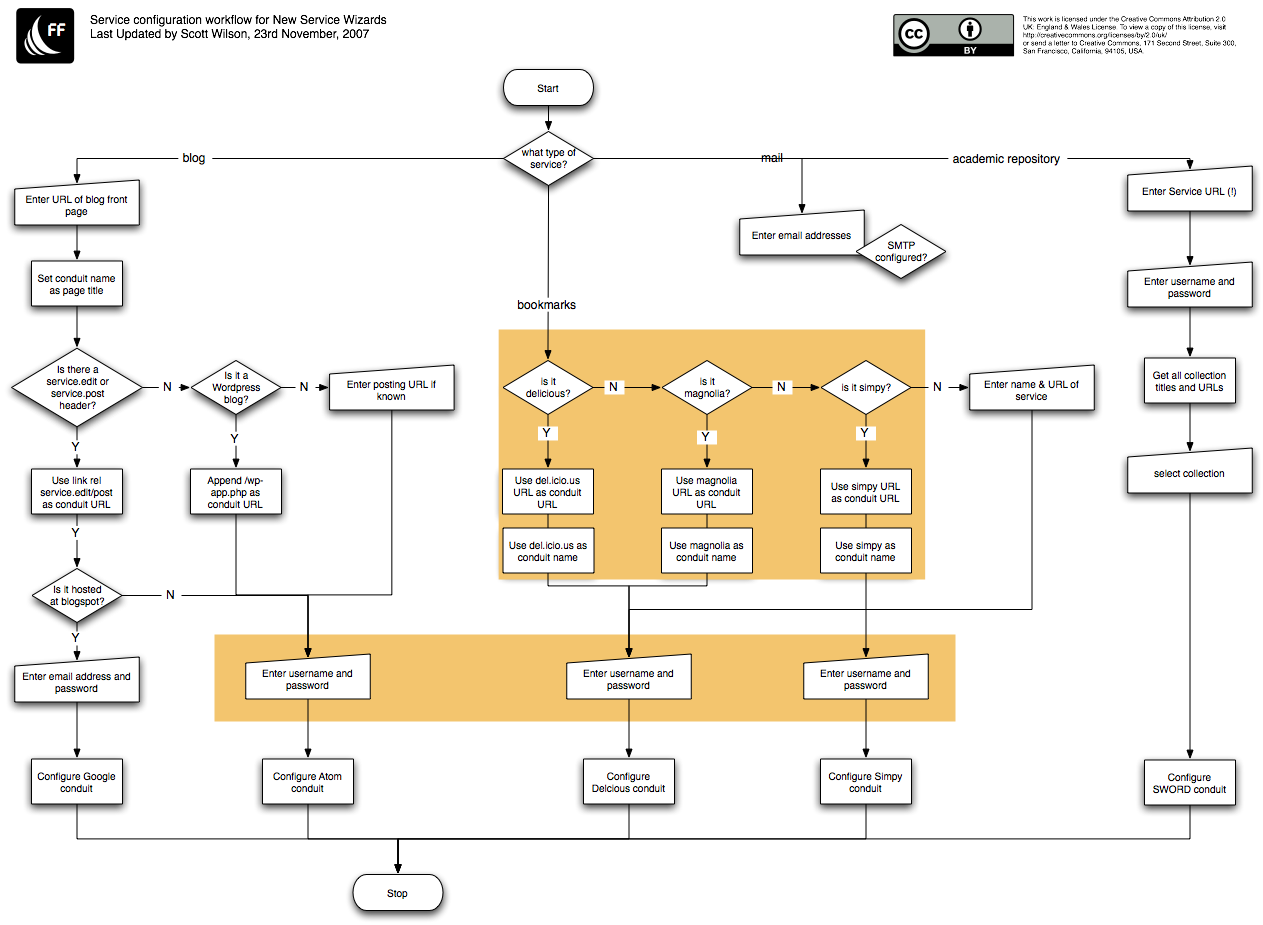

Service creation workflow

Navigating the maze of options you need to sort out for various services is quite a challenge. I’ve had to create a flowchart to keep track.

(Note that SWORD is on here. I've got that basically working now, but it's not for Release 1, because I really need to sort out its dependencies, which overlap with those of other Atom code I use.)