This is a longish summary of a presentation I gave recently, covering why I was talking, the spectrum of openness, the ways of being open, the range of activities involved in education and how open things might apply to those activities. You may want to skim through until something catches your eye.

Why I did this

When Marieke asked me to give a “general introduction to open education” for the Open Knowledge Foundation / LinkedUp Project Open Education Handbook booksprint, I admit I was somewhat nervous. More so when I saw the invite list. I mean, I’ve worked on OERs for a few years, mostly specializing in technologies for managing their dissemination and discovery; I’ve even helped write a book about that (which incidentally was the output of a booksprint, about which I have also written). But that only covers a small part of the OER endeavour, and OERs are only a small element in the Open Education movement, and on the list of invitees to the booksprint I could see the names of people who knew much more than me.

However, Martin Poulter then asked this on Twitter:

Anyone remember who said that the way to get information on the internet is not to ask for info but to give false info? Google isn't helping

— Martin L Poulter (@mlpoulter) August 14, 2013

and I thought, why not take inspiration from that approach? I can say stuff, and if it is wrong someone will put me right; it’ll be like learning about things. I like learning things, I like Open Education and I like booksprints. So this is what I said.

I wanted to emphasize that Open Education covers a wide range of activities. It has a long history, which we can see in the name of institutions like the Open University, but has recently taken on new impetus in a new direction, not disconnected from that history, but not entirely the same. Being a bit of a reductionist, I thought the simple way to illustrate the range of Open Education was to reflect on the extent and range of meanings of Open and the range of activities that may be involved in education.

The spectrum of openness

A “map” of IP rights and freedoms to show how people use and view the different “permissions” (some legal, some illegal), by David Eaves, from http://techpresident.com/news/wegov/24244/beyond-property-rights-thinking-about-moral-definitions-openness

When using, sharing and repurposing resources, teachers tend to work in the part of the spectrum spanning from proprietary through to the ignoring of property rights. It is interesting to reflect that much technical effort has been spent on facilitating the former (think Athens, Shibboleth Access Management Federation, and single sign-on solutions for identification, authentication and authorisation), political effort on legitimising some of the latter (e.g. use of orphan works, exemptions for text mining) and educational effort on avoiding what is not legitimate. One of the benefits of the OER/Open Access approach is in avoiding effort.

The ways of being open

That all focusses on open access to and use of resources, but there are other ways of being open, seen in terms such as “open development”, “open practice”, “open university” and even “open prison”, which all have something to do with who you allow to participate in what. There is much gnashing of teeth when this sense of openness gets confused with openness of access and use; for example, complaints that a standard isn’t open because it costs money or that an online course isn’t open because the resources used cannot be copied. Yes, you could spend the rest of your life trying to distinguish between “open”, “free” and “libre”, but in real life words don’t align with nice neat categories of meaning like that.

I don’t think participation has to be open to everyone for a process to be described as open. As with openness in access and use, openness in participation can happen to various extents. Towards one end of the spectrum, participation in IMS specification development is open to anyone who pays to be a member, and ISO standardization processes are open to any national standardization body; Wikipedia is an obvious example of a more open approach.

This form of openness is really interesting to me because I think that through sharing the development of resources we may see an improvement in their quality. I think that the OER work to date has largely missed this. And incidentally, having a hand in the development of a resource makes someone more likely to use that resource.

Activities involved in education

I think this picture does a reasonable job of showing the range of activities that may be involved in education, and I’ll stress from the outset that they don’t all have to be: some forms of education will only involve one or two of these activities.

Running down the diagonal you have the core processes of formal education (but note well: this isn’t a waterfall project plan, I’m not saying each one happens when the other is complete): policy, at a national through to institutional level, on how institutions are run, for example who gets to learn what and how, and who pays for it; administration, dealing with recruitment, admissions, retention, progression, graduation, timetabling, reporting, and so on; teaching, to use an old-fashioned term to include mentoring and all non-instructivist activities around the deliberate nurturing of knowledge; learning, which may be the only necessary activity here; assessment, not just summative, but also formative and diagnostic (remember, this isn’t a waterfall); and accreditation, saying who learnt what. Around these you have academic and business topics that inform or influence these processes: politics, management studies, pedagogy, psychology, philosophy, library functions, and Human Resource functions such as recruitment and staff development.

Open Education

OER interest tends to focus on the teaching, learning, assessment nexus at the middle of this picture, but Open Education should be, and is, wider. Maybe it would be useful to try to map where some of the other open endeavours fit. Open Badges, for example, sit squarely on accreditation. Open Educational Practice sits somewhere around teaching and pedagogy. Open Access to research outputs sits roughly where OER does, but with added implications for pedagogy, psychology, management and philosophy as research fields. Open research in general sits with these research fields but is also a useful way of learning. Open data is a bit tricky since it depends what you do with it, but the LinkedUp Veni Challenge submissions showed interesting ideas around library functions such as resource discovery, and around policy and administration, and learning analytics kind of comes under teaching. Similarly, Open Source Software and Open Standards cover pretty much everything on the main diagonal from administration to assessment (including library). And MOOCs? Well, the openness is in admission policy, so I’ve put them there. I suspect there is a missing “open learning” that sits over learning and covers informal education and much of what the original cMOOC pioneers were interested in.

Useful?

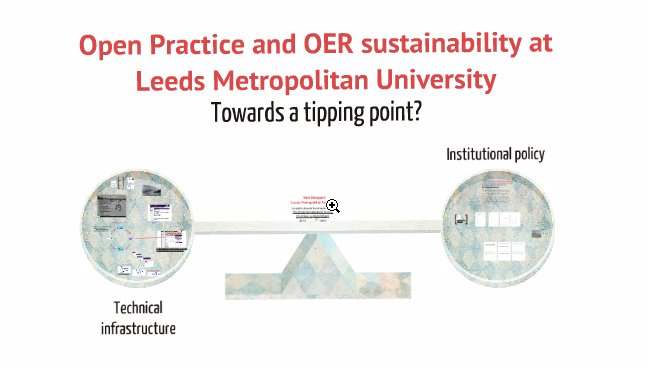

(By: Nick Sheppard, Leeds Metropolitan University)
