Following on from last week’s post on the #edcmooc, the course itself has turned to explore the notion of MOOCs in the context of utopian/dystopian views of technology and education. The questions I raised in that post are still running through my mind. However, they were at a much more holistic than personal level.

This week, I’ve been really trying to think about things from my student (or learner) point of view. Are MOOCs really changing the way I engage with formal education systems? On the one hand yes, as they are allowing me (and thousands of others) to get a taste of courses from well-established institutions. At a very surface level, who doesn’t want to say they’ve studied at MIT/Stanford/Edinburgh? As I said last week, there’s no fee, so there is less pressure in one sense to explore new areas, and if they don’t suit you there’s no issue in dropping out – well, not for the student at this stage anyway. Perhaps in the future, through various analytical methods, serial drop-outs will be recognised by “the system” and not be allowed to join courses, or have to start paying to be allowed in.

But on the other hand, is what I’m actually doing really different from what I did at school, when I was an undergraduate, or when I was a student on “traditional” online distance courses? Well no, not really. I’m reading selected papers and articles, watching videos, contributing to discussion forums – nothing I’ve not done before, or presented to me in a way that I’ve not seen before. The “go to class” button on the Coursera site does make me giggle tho’ as it’s just soo American and every time I see it I hear a disembodied American voice. But I digress.

The element of peer review for the final assignment for #edcmooc is something I’ve not done as a student, but it’s not a new concept to me. Despite more information on the site and from the team this week, I’m still not sure how this will actually work, and whether I’ll get my certificate of completion for just posting something online or whether there is a minimum number of reviews I need to get. Like many of my fellow students, I have been concerned about the final assessment from day 1, which seemed to come as a surprise to some of the course team. During the end of week 1 Google Hangout, the team did try to reassure people, but surely they must have expected that we were going to go and look at week 5 and “final assessment” almost before anything else? Students are very pragmatic: if there’s an assessment, we want to know the where, when, what, why, who and how as soon as possible. That’s how we’ve been trained (and I use that word very deliberately). Like thousands of others, my whole education career from primary school onwards centred around final grades and exams – so I want to know as much as I can so I know what to do so I can pass and get that certificate.

That overriding response to any kind of assessment can very easily override any of the other softer (but just as worthy) reasons for participation, and override the potential of social media to connect and share on an unprecedented level.

As I’ve been reading and watching more dystopian than utopian material, and observing the general MOOC debate taking another turn with the pulling of the Georgia Tech course, I’ve been thinking a lot about the whole experimental nature of MOOCs. We are all just part of a huge experiment just now, students and course teams alike. But we’re not putting very many new elements into the mix, and our pre-determined behaviours are driving our activity. We are in a sense all just ghosts in the machine. When we do try to do something different, participation can drop dramatically. I know that I, and lots of my fellow students on #oldsmooc, have struggled to actually complete project-based activities.

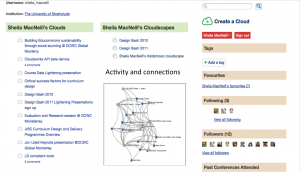

The community element of MOOCs can be fascinating, and the use of social network analysis can help to give some insights into activity, patterns of behaviour and connections. But with so many people on a course, is it really possible to make and sustain meaningful connections? From a selfish point of view, having my blog picked up by the #edcmooc news feed has greatly increased my readership and, more importantly, I’m getting comments, which are more meaningful to me than hits. I’ve tried to read other posts too, but in the first week it was really difficult to keep up, so I’ve fallen back to a very pragmatic, reciprocal approach. With so much going on you need to have strategies to cope, and there is quite a bit of activity around developing a MOOC survival kit, which has come from fellow students.

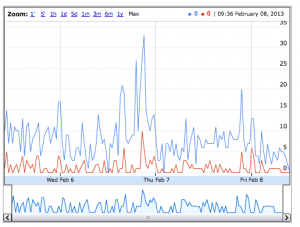

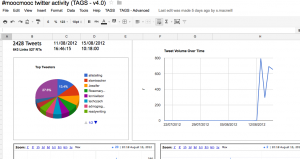

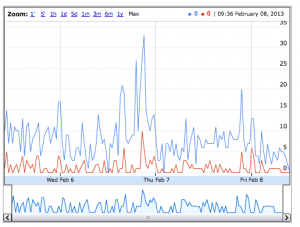

As the course develops, the initial euphoria and social web activity may well be slowing down. Looking at the Twitter activity, it does look like it is on a downward trend.

#edcmooc Twitter activity diagram

Monitoring this level of activity is still a challenge for the course team and students alike. This morning my colleague Martin Hawksey and I were talking about this, and speculating that maybe there are valuable lessons we in the education sector can learn from the commercial sector about managing “massive” online campaigns. Martin has also done a huge amount of work aggregating data and I’d recommend looking at his blogs. This post is a good starting point.

Listening to the Google Hangout session run by the #edcmooc team, they again seemed to have underestimated the time-sink reality of having 41,000 students on a course. Despite being upfront about not being everywhere, the temptation to look must be overwhelming. This was also echoed in the first couple of weeks of #oldsmooc. Interestingly, this week there are teaching assistants and students from the MSc course actively involved in the #edcmooc.

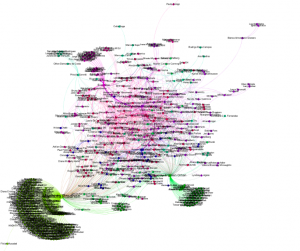

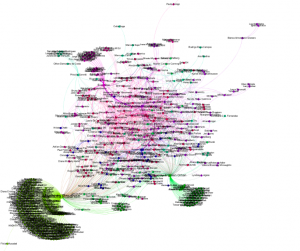

I’ve also been having a play with the data from the Facebook group. I’ve had a bit of interaction there, but not a lot. So despite it being a huge group, I don’t get the impression that, apart from posting links to blogs for the news feed, there is a lot of activity or connections. This seems to be reflected in the graphs created from the data.

#edc Facebook group friends connections

This is a view based on friends connections. NB it was very difficult for a data novice like me to get any meaningful view of this group, but I hope that this gives the impression of the massive number of people and relative lack of connections.

There are a few more connections which can be drawn from the interactions data, and my colleague David Sherlock managed to create a view where some clusters are emerging – but with such a huge group it is difficult to read that much into the visualisation, apart from the fact that there are lots of nodes (people).

#edcmooc Facebook group interactions

I don’t think any of this is unique to #edcmooc. We’re all just learning how to design/run and participate at this level. Technology is allowing us to connect and share at a scale unimaginable even 10 years ago, if we have access to it. NB there was a very interesting comment on my blog about us all being digital slaves.

Despite the potential affordances of access at scale, it seems to me we are increasingly just perpetuating an existing system if we don’t take more time to understand the context and consequences of our online connections and communities. I don’t need to connect with 40,000 people, but I do want to understand more about how and why I could/do. That would be a really new element to add to any course, not just MOOCs (and not something that’s just left to a course specifically about analytics). Unless that happens, my primary driver will be that “completion certificate”. In this instance, and many others, to get that I don’t really need to make use of the course community. So I’m just perpetuating an existing system where I know how to play the game, even if its appearance is somewhat disguised.
