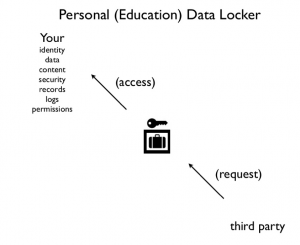

Following on from yesterday’s post, another “thought bomb” that has been running around my brain is something far closer to the core of Audrey’s “who owns your educational data?” presentation. Audrey was advocating the need for student-owned personal data lockers (see screenshot below). This idea also chimes with the work of the Tin Can API project and, closer to home in the UK, the MiData project. The latter is concerned with more generic data, such as utility and mobile phone usage, than with educational data, but the data locker concept is key there too.

As you will know, dear reader, I have turned into something of a MOOC-aholic of late. I am becoming increasingly interested in how I can make sense of my data and network connections in and across the courses I’m participating in and, of course, how I can access and use the data I’m creating in and across these “open” courses.

I’m currently not a very active member of the LAK13 learning analytics MOOC, but the first activity for the course is, I hope, going to help me frame some of the issues I’ve been thinking about in relation to my educational data and, in turn, my personal learning analytics.

Using the framework for the first assignment/task for LAK13, this is what I am going to try and do.

1. What do you want to do/understand better/solve?

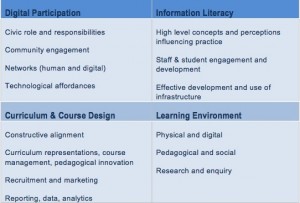

I want to compare what data about my learning activity I can access across 3 different MOOC courses and the online spaces I have interacted in on each, and see if I can identify any potentially meaningful patterns or networks that would help me reflect on, and better understand, my learning experiences. I also want to explore how/if learning analytics approaches could help me in terms of contributing to my personal learning environment (PLE) in relation to MOOCs, and whether it is possible to illustrate the different “success” measures from each course provider in a coherent way.

2. Defining the context: what is it that you want to solve or do? Who are the people that are involved? What are social implications? Cultural?

I want to see how/if I can aggregate my data from several MOOCs in a coherent open space and see what learning analytics approaches can be of help to a learner in terms of contextualising their educational experiences across a range of platforms.

This is mainly an experiment using myself and my data. I’m hoping that it might start to raise issues from the learner’s perspective which could have implications for course design, access to data, and thoughts around student-created and student-owned eportfolios and/or data lockers.

3. Brainstorm ideas/challenges around your problem/opportunity. How could you solve it? What are the most important variables?

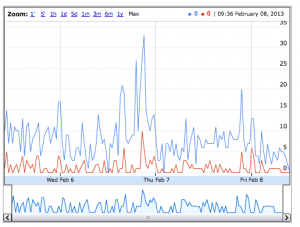

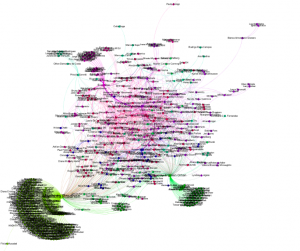

I’ve already done some initial brainstorming around using SNA techniques to visualise networks and connections in the Cloudworks site which the OLDS MOOC uses. Tony Hirst has (as ever) pointed the way to some further exploration. And I’ll be following up on Martin Hawksey’s recent post about discussion group data collection.

I’m not entirely sure about the most important variables just now, but one challenge I see is actually finding myself/my data in a potentially huge data set and finding useful ways to contextualise me using those data sets.
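As a rough illustration of what “finding myself” in a larger data set might look like, here is a minimal Python sketch of pulling one person’s immediate network (an “ego network” in SNA terms) out of a list of interactions. It assumes the interaction data has already been reduced to simple (person, person) pairs; the sample names, the `build_graph` and `ego_network` helpers, and the data shape are all hypothetical and not taken from Cloudworks, Coursera or any other platform.

```python
from collections import defaultdict


def build_graph(edges):
    """Build an undirected adjacency map from (person_a, person_b) pairs."""
    graph = defaultdict(set)
    for a, b in edges:
        if a == b:
            continue  # ignore replies to oneself
        graph[a].add(b)
        graph[b].add(a)
    return graph


def ego_network(graph, me):
    """Return my direct contacts and the links that exist among them."""
    contacts = set(graph.get(me, set()))
    links = {
        tuple(sorted((a, b)))
        for a in contacts
        for b in graph[a]
        if b in contacts
    }
    return contacts, links


# Hypothetical sample data: who interacted with whom in a discussion space.
interactions = [
    ("me", "alice"),
    ("me", "bob"),
    ("alice", "bob"),
    ("bob", "carol"),
    ("dave", "carol"),
]

graph = build_graph(interactions)
contacts, links = ego_network(graph, "me")
print(sorted(contacts))
print(sorted(links))
```

The point of the sketch is only that, once the data is in a simple edge-list form, narrowing a large network down to “me and the people around me” is a small step; the hard part is likely to be getting the data into that form in the first place.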

4. Explore potential data sources. Will you have problems accessing the data? What is the shape of the data (reasonably clean? or a mess of log files that span different systems and will require time and effort to clean/integrate?) Will the data be sufficient in scope to address the problem/opportunity that you are investigating?

The main issue I see just now is going to be collecting data, but I believe there is some data that I can access about each MOOC. The MOOCs I have in mind are primarily #edc (Coursera) and #oldsmooc (OU). One seems to be far more open in terms of potential data access points than the other.

There will be some cleaning of data required, but I’m hoping I can “stand on the shoulders of giants” and re-use some Google spreadsheet goodness from Martin.

I’m fairly confident that there will be enough data for me to at least understand the challenges involved in letting learners try to make more sense of their data.

5. Consider the aspects of the problem/opportunity that are beyond the scope of analytics. How will your analytics model respond to these analytics blind spots?

This project is far wider than just analytics, as it will hopefully help me to understand the potential for analytics to help me, as a learner, make sense of and share my learning experiences in one place that I choose. Already I see Coursera, for example, trying to model my interactions on their courses into a space they have designed – and I don’t really like that.

I’m thinking much more about personal aggregation points/sources than the creation of an actual data locker. However, it may be that some existing eportfolio systems could provide the basis for that.

As ever, I’d welcome any feedback/suggestions.