In preparation for this year’s Design Bash, I’ve been thinking about some of the “big” questions around learning design and what we actually want to achieve on the day.

When we first ran a Design Bash, four years ago as part of the JISC Design for Learning Programme, we outlined three areas of activity/interoperability that we wanted to explore:

* System interoperability – looking at how the import and export of designs between systems can be facilitated;

* Sharing of designs – ascertaining the most effective way to export and share designs between systems;

* Describing designs – discovering the most useful representations of designs or patterns and whether they can be translated into runnable versions.

And to be fair, I think these are still valid and summarise the main areas where we still need more exploration and sharing, particularly the translation of designs into runnable versions.

Over the past three years, there has been lots of progress in terms of the wider context of learning design in course and curriculum design (e.g. through the JISC Curriculum Design and Delivery programmes) and also in terms of how best to support practitioners to engage with, develop and reflect on their practice. The evolution of the pedagogic planning tools from the Design for Learning programme into the current LDSE project is a key exemplar. We’ve also seen progress each year as a direct result of discussions at previous Design Bashes, e.g. the embedding of LAMS sequences into Cloudworks (see my summary post from last year’s event for more details).

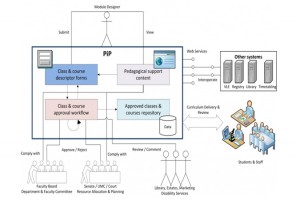

The Curriculum Design projects are looking at the bigger picture of the processes involved in formal curriculum design and approval. Their work is making progress in bridging the gaps between formal course descriptions, their representations/manifestations in such areas as course handbooks and marketing information, and what actually happens at the point of delivery to students. There is a growing set of tools emerging to help provide a number of representations of the curriculum. We also have a more thorough understanding of the wider business processes involved in curriculum approval, as exemplified by this diagram from the PiP team, University of Strathclyde.

PiP Business Process workflow model

Given the multiple contexts we’re dealing with, how can we make the most of the day? Well, I’d like to try and move away from the complexity of the PiP diagram and concentrate a bit more on the “runtime” issue, i.e. transforming and importing representations/designs into systems which can then be used by students. It still takes a lot to beat the integration of design and runtime in LAMS imho. So, I’d like to see some exploration of potential workflows around the systems represented, and of how far the inputs and outputs from each can actually go.

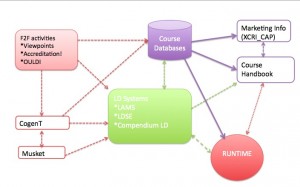

Based on some of the systems I know will be represented at the event, the diagram below makes a start at trying to illustrate some workflows we could potentially explore. N.B. This is a very simplified diagram and is meant as a starting point for discussion; it is not a complete picture.

Design Bash Workflows

So, for example, starting from some initial face-to-face activities such as the workshops being so successfully developed by the Viewpoints project, the Accreditation! game from the SRC project at MMU, or the various OULDI activities, what would be the next step? Could you then transform the mostly paper-based information into a set of learning outcomes using the Co-genT tool? Could the file produced there then be imported into a learning design tool such as LAMS, LDSE or Compendium LD? And/or could the file be imported into the MUSKET tool and transformed into XCRI CAP, which could then be used for marketing purposes? Can the finished design then be imported into a course database and/or a runtime environment such as a VLE or LAMS?

Or alternatively, working from the starting point of a course database, e.g. SRC, where they have developed a set template for all courses: would using the learning-outcome-generating properties of the Co-genT tool enable staff to populate that database with “better” learning outcomes which are meaningful to the institution, teacher and student? (See this post for more information on the Co-genT toolkit.)

Or, as another option, what is the scope for integrating some of these tools/workflows with other “hybrid” runtime environments such as Pebblepad?

These are just a few suggestions, and hopefully we will be able to start exploring some of them in more detail on the day. In the meantime, if you have any thoughts/suggestions, I’d love to hear them.