No educational technology conference at the moment is complete without a bit of MOOC-ery, and #lak13 was no exception. However, the “Deconstructing disengagement: analyzing learner sub-populations in massive open online courses” paper moved on from the familiar territory of broad-brush big numbers towards a more nuanced view of some of the emerging patterns among learners across three Stanford-based Coursera courses.

The authors have created:

“a simple, scalable, and informative classification method that identifies a small number of longitudinal engagement trajectories in MOOCs. Learners are classified based on their patterns of interaction with video lectures and assessments, the primary features of most MOOCs to date . . .”

“. . . the classifier consistently identifies four prototypical trajectories of engagement.”

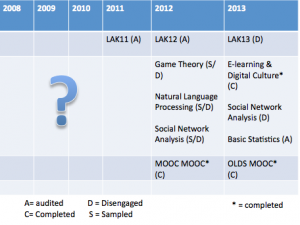

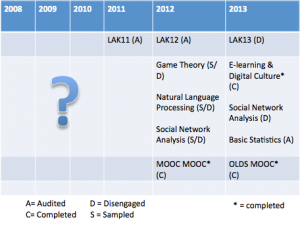
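The quoted description is quite high level, so here is a rough toy sketch of how a trajectory classifier of this kind might work. Everything in it is my own assumption for illustration rather than a detail taken from the paper: each learner's activity in each assessment period is reduced to one of four hypothetical states (T on track, B behind, A auditing by watching videos only, O out), those states are mapped to numbers, and learners are grouped by a small k-means loop seeded with four hand-picked prototype trajectories named after the paper's labels. The paper's actual features, encoding and clustering details may well differ.

```python
# Toy sketch only: states, scores, prototypes and names are all hypothetical.
# Per-assessment-period states: T = on track, B = behind,
# A = auditing (watched videos only), O = out (no activity).
SCORE = {"T": 3, "B": 2, "A": 1, "O": 0}

# Hypothetical seed prototypes for a six-period course, one per label.
SEEDS = {
    "completing": "TTTTTT",
    "auditing": "AAAAAA",
    "disengaging": "TTTOOO",
    "sampling": "AOOOOO",
}


def encode(trajectory: str) -> list[int]:
    """Turn a string of per-period states into a numeric vector."""
    return [SCORE[state] for state in trajectory]


def l1(a, b) -> float:
    """Manhattan (L1) distance between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def classify(learners: dict[str, str], iterations: int = 10) -> dict[str, str]:
    """Cluster learner trajectories with k-means seeded by SEEDS."""
    labels = list(SEEDS)
    centroids = [encode(SEEDS[name]) for name in labels]
    points = {who: encode(traj) for who, traj in learners.items()}
    assignment = {}
    for _ in range(iterations):
        # Assignment step: each learner joins the nearest centroid.
        assignment = {
            who: min(range(len(centroids)), key=lambda i: l1(p, centroids[i]))
            for who, p in points.items()
        }
        # Update step: move each centroid to the mean of its members
        # (an empty cluster keeps its previous centroid).
        new_centroids = []
        for i, old in enumerate(centroids):
            members = [points[w] for w, k in assignment.items() if k == i]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(old)
        if new_centroids == centroids:
            break  # converged: centroids stopped moving
        centroids = new_centroids
    return {who: labels[k] for who, k in assignment.items()}


if __name__ == "__main__":
    learners = {"ana": "TTTTTB", "ben": "AAAAAO", "cai": "TTOOOO", "dee": "OAOOOO"}
    print(classify(learners))
    # prints {'ana': 'completing', 'ben': 'auditing', 'cai': 'disengaging', 'dee': 'sampling'}
```

Seeding the clusters with named prototypes is just a convenience so that each cluster comes back with a readable label; an unseeded clustering would still find groups but would leave you to work out what to call them.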

As I listened to the authors present the paper, I couldn’t help but reflect on my own recent MOOC experience. Their classifier labels (auditing, completing, sampling, disengaging) made a lot of sense to me. At one time or another I have been in all four of these “states”.

The study investigated typical Coursera courses, which mainly follow the talking-head video, quiz, discussion forum and final assignment format. The authors suggested that using the framework to identify sub-populations of learners would allow more customisation of courses and (hopefully) more engagement and, I guess, ultimately more completion.

I found it interesting that they identified completing learners as the most active on the forums, something that contradicts my (limited) experience. I’ve signed up for a number of the science-y Coursera courses and have sampled and disengaged. Compare that with the recent #edcmooc, which was also run through Coursera but didn’t use the talking head/quiz/forum design. Although I didn’t really engage with the discussion forums (I tried, but they just “don’t do it for me”), I did feel very engaged with the content, the activities and my peers, and I completed the course.

I’ve spoken to a number of fellow MOOC-ers recently and they’re not that keen on the discussion forums either. Of course, it’s highly likely that the people I speak to are like me and probably interact more on their blogs and Twitter than in discussion forums. Maybe it’s an arts/science thing? Shorter discussions? I don’t really know, but at scale I find any discussion forum challenging, time-consuming and, to be completely honest, a bit of a waste of time.

Another finding to emerge from the study was that the completing and auditing sub-populations (auditors being those who just watch the videos and don’t necessarily contribute to forums or submit assignments) have the best experiences of the courses. Again drawing on my own experiences, I can see why this could be the case. Although I have dropped out of courses, the videos I’ve watched have all been “good” in the sense that they were of high technical quality and the content was very clear. So I’ve watched and thought “oh, I didn’t know that”, “oh, so that’s what that means?”, “oh, that’s what I need to do”. That last thought is usually the point at which I disengage, as there is something far more pressing I need to do.

But I have to say that the experience of actually completing MOOCs (I’m now at three) was far richer. That was partly down to the interaction with my peers each time, and partly to the cMOOC ethos of each course design.

That said, I do think the auditing, completing, disengaging and sampling labels are a very useful addition to the discourse and to our understanding of what is actually going on within the differing populations of learners in MOOCs.

A more detailed article on the research is available here.