The concept of the personal learning environment (PLE) could usefully be tied more closely to the e-portfolio (e-p), as both can support the informal learning of skills, competence, etc., whether or not these abilities are formally defined.

Several people at CETIS/IEC here in Bolton had a wide-ranging discussion this Thursday morning (2010-02-18), focused on the concept of the “personal learning environment” or PLE. It’s a concept that CETIS people helped develop, from the Colloquia system, around 1996, and that Bill Olivier and Oleg Liber formulated in a paper in 2001 (see http://is.gd/8DWpQ). The idea is definitely related to an e-portfolio, in that an e-p can store information related to this personal learning, and the idea is generally to have portfolio information continue “life-long” across different episodes of learning.

As Scott Wilson pointed out, it may be that the PLE concept overreached itself. Even to conceive of “a” system that supports personal learning in general is hazardous, as it invites people to design a “big” system in their own minds. Inevitably, such a “big” system is impractical, and the work on PLEs that was done between, say, 2000 and 2005 has now been taken forward in different ways. Scott’s work on widgets is a good example of enabling tools that have a more limited scope but can be joined together as needed.

We’ve seen parallel developments in the e-portfolio world. I think back to LUSID, from 1997, where the emphasis was on individuals auditing and developing their transferable / employability skills. Then increasingly we saw the emergence of portfolio tools that included more functionality: presentation to others (through the web), and “social” communication and collaboration tools. Just as widgets can be seen as the dethroning of the concept of monolithic learning technology in general, so the “thin portfolio” concept (borrowing from the prior “personal information aggregation and distribution service” concept) represents the idea that you don’t need all that portfolio information on one server, but that it is very helpful to have one place where you can access all “your” information, and set permissions for others to view it. This concept is only beginning to be implemented. The current PIOP 3 work plans to lay down more of the web services groundwork for this, but perhaps we should be looking over at the widgets work.

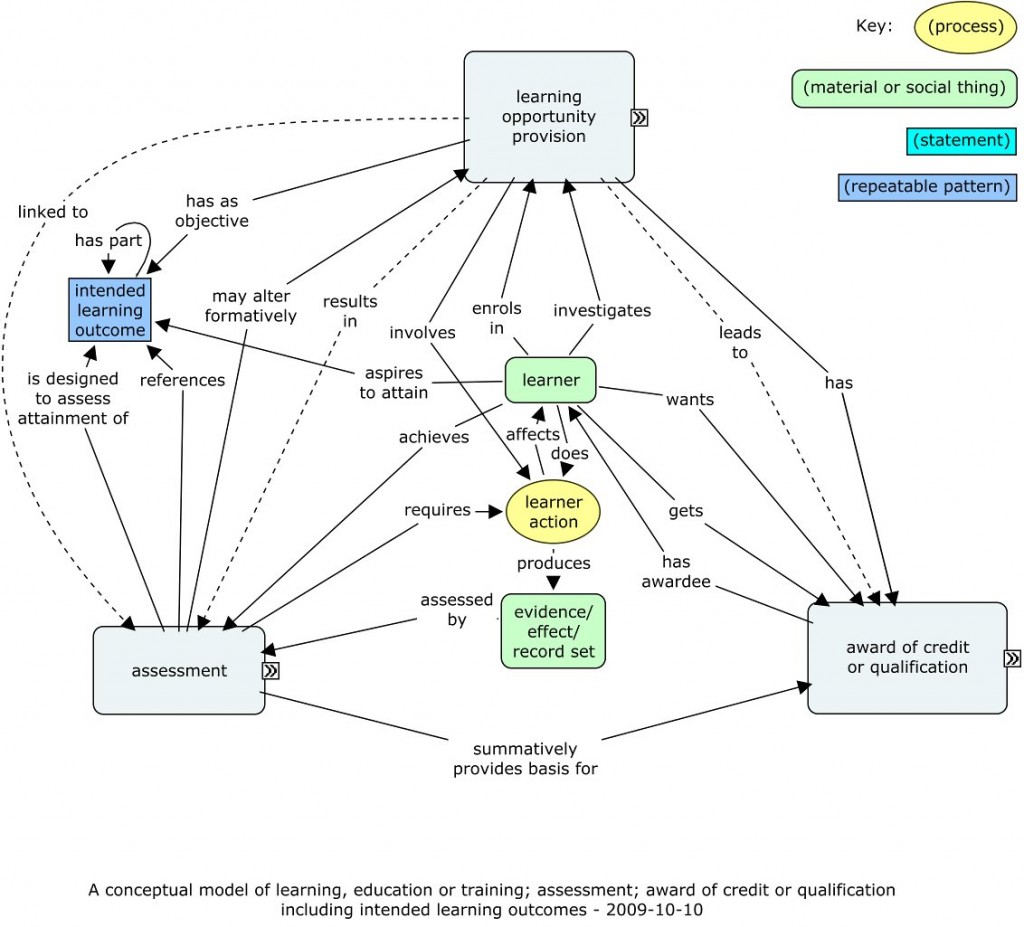

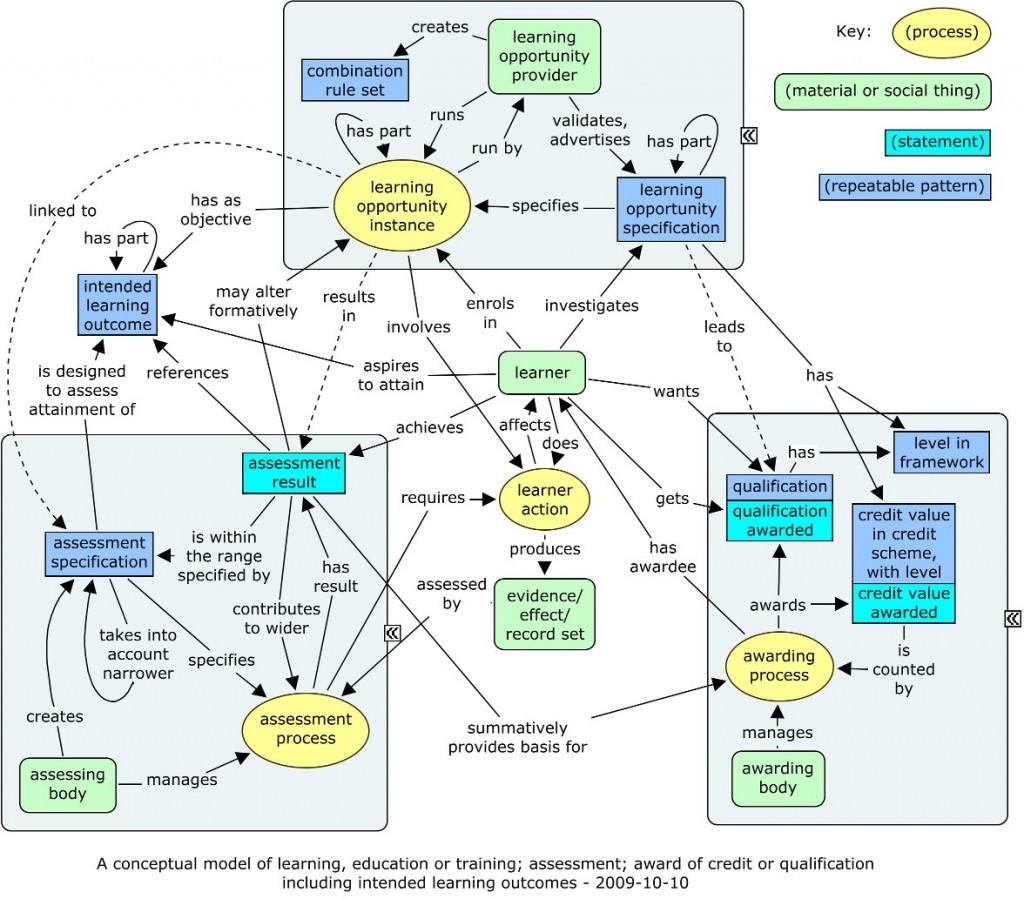

Skills and competences have long been connected with portfolio tools. Back in 1997 LUSID had a framework structure for employability skills. What is new is the recent, greatly enlarged interest in learning outcomes, abilities, skills and competencies. Recent reading for eCOTOOL has revealed that the ECVET approach, as well as being firmly based on “outcomes” (on which ICOPER also focuses), also recognises non-formal and informal learning as central. Thus ECVET credit is not attached only to vocational courses, but also to the accreditation of prior learning by institutions that are prepared to validate the outcomes involved. Can we, perhaps, connect with this European policy, and develop tools that are aimed at helping to implement it? It takes far-sighted institutions to give up the short-term gain of students enrolled on courses and instead to assess their prior learning and validate their existing abilities. But surely it makes sense in the long run, as long as standards are maintained?

If we are to have learning technology (and it really doesn’t matter whether you call them PLEs, e-portfolios or whatever) that supports the acquisition or improvement of skills and competence by individuals in their own diverse ways, then surely a central organising principle within those tools needs to be the skills, competencies or whatever that the individual wants to acquire or improve. Can we draw, perhaps, on the insights of PLE and related work, put them together with e-portfolio work, and focus on tools to manage the components of competence? In the IEC, we have all our experience on the TENCompetence project that has finished, as well as ICOPER that is underway and eCOTOOL that is starting. Then we expect there will be work associated with PIOP 3 that brings in frameworks of skill and competence. Few people can be in a better position to do this work than we are in CETIS/IEC.

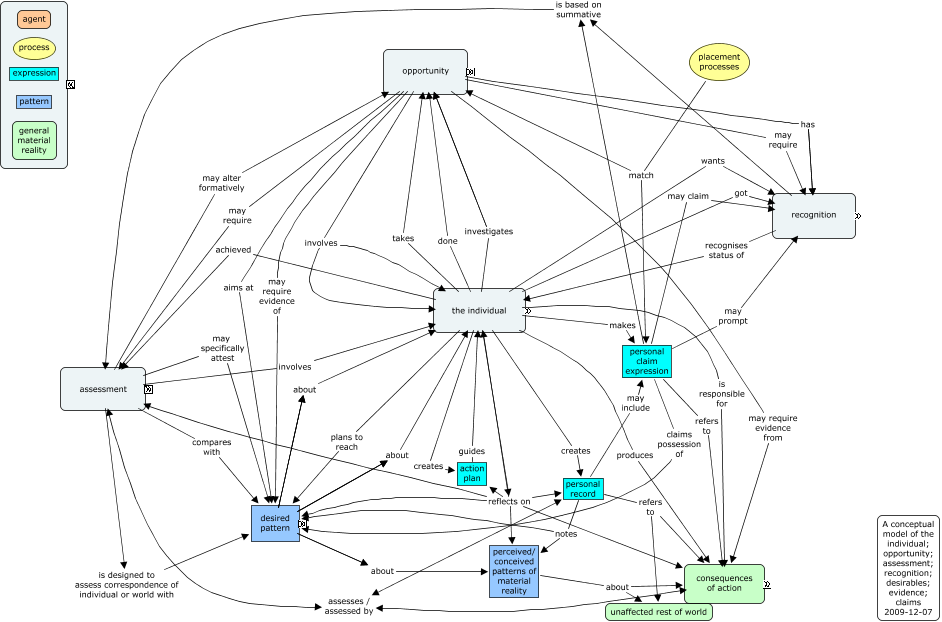

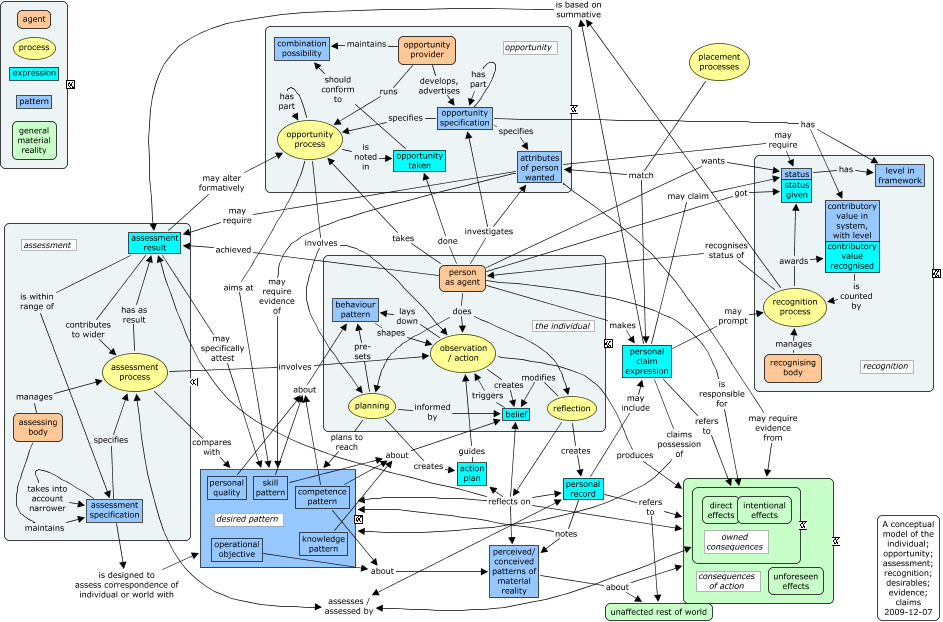

In part, I would formulate this as providing technology and tools to help people recognise their existing (uncertificated) skills and evidence them (the portfolio part), and then to help them, and the institutions they attend, to assess this “prior learning” (APL) and bring it into the world of formal recognition and qualifications.

But I think there is another very important aspect to the technology connected with the PLE concept, and that is to provide the guidance that learners need to ensure they get on the “right” course. At the meeting, we discussed how employers often do not want the very graduates whose studies have titles that seem to relate directly to the job. What has gone wrong? It’s all very well treating students like customers (“the customer is always right”), but what happens when a learner wants to take a course aimed at something one believes they are not going to be successful at? Perhaps the right intervention is to start earlier, helping learners clarify their values before their goals, and understand who they are before deciding what they should do. This would be “personal learning” in the sense of learning about oneself. Perhaps the PDP part of the e-portfolio community, and those who come from careers guidance, know more about this, but even they sometimes seem not to know what to do for the best. To me, this self-knowledge requires a social dimension (with the related existing tools), and is something that needs to be able to draw on many aspects of a learner’s life (a “lifewide” portfolio, perhaps).

So, to reconstruct PLE ideas, not as monolithic systems, but as parts, there are two key parts in my view.

The first would be a tool for bringing together evidence residing in different systems, and organising it to provide material for reflection on, and evidence of, skills and competence across different areas of life. It would integrate with institutional systems for recognising what has already been learned, as well as for slotting people into suitable learning opportunities. This would play a natural part in continuous professional development, and in meeting the relatively short-term learning, education and training needs that we can identify from an existing working perspective, and thus in the kind of workplace learning that many are predicting will need to grow.

The second may perhaps be not one tool but several, to help people understand themselves, their values, their motives, their real goals, and the activities and employment that they would actually find satisfying, rather than what they might falsely imagine. Without this function, any learning, education or training risks being wasted. Doing this seems much more challenging, but also much more deeply interesting to me.