Many technologies and tools in use in universities and colleges are not developed for educational settings. In the classroom particularly teachers have become skilled at applying new technologies such as Twitter to educational tasks. But technology also plays a crucial role behind the scenes in any educational organisation in supporting and managing learning, and like classroom tools these technologies are not always developed with education in mind. So it is refreshing to find an example of an application developed for UK Higher and Further education being adopted by the commercial sector.

Archi is an open source ArchiMate modelling tool developed as part of JISC’s Flexible Service Delivery programme to help educational institutions take their first steps in enterprise architecture modelling. ArchiMate is a modelling language hosted by the Open Group who describe it as “a common language for describing the construction and operation of business processes, organizational structures, information flows, IT systems, and technical infrastructure”. Archi enforces all the rules of ArchiMate so that the only relationships that can be established are those allowed by the language.

Since the release of version 1.0 in June 2010, Archi has built up a large user base and now gets in excess of 1000 downloads per month. Of course, universities and colleges are not the only organisations that need a better understanding of their internal business processes. We spoke to Phil Beauvoir, Archi developer at JISC CETIS, about the tool and why it has a growing number of users in the commercial world.

Christina Smart (CS): Can you start by giving us a bit of background about Archi and why it was developed?

Phil Beauvoir (PB): In the summer of 2009 Adam Cooper asked whether I was interested in developing an ArchiMate modelling tool. Some of the original JISC Flexible Service Delivery projects had started to look at their institutional enterprise architectures, and wanted to start modelling. Some projects had invested in proprietary tools, such as BiZZdesign’s Architect, and it was felt that it would be a good idea to provide an open source alternative. Alex Hawker (the FSD Programme manager) decided to invest six months of funding to develop a proof of concept tool to model using the ArchiMate language. The tool would be aimed at the beginner, be open source, cross-platform and would have limited functionality. I started development on Archi in earnest in January 2010 and by April had the first alpha version 0.7 ready. Version 1.0 was released in June 2010, and it grew from there.

CS: How would you describe Archi?

PB: The web site describes Archi as: “A free, open source, cross platform, desktop application that allows you to create and draw models using the ArchiMate language”. Users who can’t afford proprietary software would use standard drawing tools such as Omnigraffle or Visio for modelling. Archi is positioned somewhere between those drawing tools and a tool like BiZZdesign’s Architect. It doesn’t have all the functionality and enterprise features of the BiZZdesign tool, but it has more than just plain drawing tools. Archi also has hints, help and user assistance technology built into it. When you’re drawing elements there are certain ArchiMate rules about which connections you can make, and if you try to make a connection that’s not allowed you get an explanation of why not. So for the beginner it is a great way to start understanding ArchiMate. We keep the explanations simple because we aim to make things easier for those users who are beginners in ArchiMate. As the main developer I try to keep Archi simple, because there’s always a danger that you keep adding features until the tool becomes unusable. I try to steer a course between usability and features.

Archi screenshot

Another aspect of Archi is the way it supports the modelling conversation. Modelling is not done in isolation; it’s about capturing a conversation between key stakeholders in an organisation. Archi allows you to sketch a model and take notes in a Sketch View before you add the ArchiMate enterprise modelling rules. A lot of people use the Sketch View. It enables a capture of a conversation, the “soft modelling” stage before undertaking “hard modelling”.

CS: How many people are using it within the Flexible Service Delivery programme?

PB: I’m not sure, I know the King’s College, Staffordshire and Liverpool John Moores projects were using it. Some of the FSD projects tended to use both Architect and Archi. If they already had one licence for BiZZdesign Architect they would carry on using it for their main architect, whereas other “satellite” users in the institution would use Archi.

CS: Archi has a growing number of users outside education, who are they and how did they discover Archi?

PB: Well the first version was released in June 2010, and people in the FSD programme were using it. Then in July 2010 I got an email from a large Fortune 500 insurance company in the US, saying they really liked the tool and would consider sponsoring Archi if we implemented a new feature. I implemented the feature anyway and we’ve built up the relationship with them since then. I know that this company has in the region of 100 enterprise architects and they’ve rolled Archi out as their standard enterprise architecture modelling tool.

I am also aware of other commercial companies using it, but how did they discover it? Well, I think it’s been viral. A lot of businesses spend a lot of money advertising and pushing products, but the alternative strategy is pull, when customers come to you. Archi is of the pull variety. Because there is a need out there, we haven’t had to do very much marketing; people seem to have found Archi on their own. Also, TOGAF (The Open Group Architecture Framework), developed by the Open Group, is becoming very popular and I guess Archi is useful for people adopting TOGAF.

In 2010 BiZZdesign were, I think, concerned about Archi being a competitor in the modelling tool space. However, now they’re even considering offering training days on Archi, because Archi has become the de facto free enterprise modelling tool. Archi will never be a competitor to BiZZdesign’s Architect. They have lots of developers and there’s only me working on Archi, so it would be nuts to try to compete. So we will focus on the aspects of Archi that make it unique (the learning aspects, the focus on beginners and the ease of use) and clearly forge a path between the two sets of tools.

Many people will start with Archi and then upgrade to BiZZdesign’s Architect, so we’re working on that upgrade path now.

CS: Why do you think it is so popular with business users?

PB: I’m end-user driven. For me Archi is about the experience of the end users, ensuring that the experience is first class and that it “just works”. It’s popular with business users firstly because it’s free, secondly because it works on all platforms, and thirdly because it’s aimed at those making their first steps with ArchiMate.

CS: What is the immediate future for Archi?

PB: We’re seeking sponsorship deals and other models of sustainability because obviously JISC can’t go on supporting it forever. One of the models of sustainability is to get Archi adopted by something like the Eclipse Foundation. But you have to be careful that development continues in those foundations, because there is a risk of it becoming a software graveyard, if you don’t have the committers who are prepared to give their time. There is a vendor who has expressed an interest in collaborating with us to make sure that Archi has a future.

Lots of software companies now have service business models, where you provide the tool for free but charge for providing services on top of the free tool. The Archi tool will always be free, and anyone could package it up and sell it. I know they’re doing that in China because I’ve had emails from people doing it. They’ve translated it and are selling it, and that’s ok because that’s what the licence model allows.

In terms of development we’re adding some new functionality. A new concept of a Business Model Canvas is becoming popular, where you sketch out your new business models. The canvas is essentially a nine-box grid to which you add various key partners, stakeholders and so on. We’re adding a canvas construction kit to Archi, so people can design their own canvas for new business models. The canvas construction kit is aimed at the high level discussions that people have when they start modelling their organisations.

CS: You’ve developed a number of successful applications for the education sector over the years, including Colloquia, Reload and ReCourse. How do you feel the long term future for Archi compares with those?

PB: Colloquia was the first tool I developed back in 1998, and I don’t really think it’s used anymore. But really Colloquia was more a proof of concept to demonstrate that you could create a learning environment around the conversational model, which supported learning in a different way from the VLEs that were emerging at the time. Its longevity has been as a forerunner to social networking and to the concept of the Personal Learning Environment.

Reload was a set of tools for doing content packaging and SCORM. They’re not meant for teachers, but they’re still being used.

The ReCourse Learning Design tool was developed for a very niche audience of those people developing scripted learning designs.

I think the long term future for Archi is better than those, partly because there’s a very large active community using it, and partly because it can be used by all enterprises and isn’t just a specific tool for the education sector. I think Archi has an exciting future.

User feedback

Phil has received some very positive feedback about Archi via email from JISC projects as well as those working in the commercial world.

JISC projects

“The feeling I get from Archi is that it’s helping me to create shapes, link and position them rather than jumping around dictating how I can work with it. And the models look much nicer too… I think Archi will allow people to investigate EA modelling cost free to see whether it works for them, something that’s not possible at the moment.”

“So why is Archi significant? It is an open source tool funded by JISC based on the ArchiMate language that achieves enough of the potential of a tool like BiZZdesign Architect to make it a good choice for relatively small enterprises, like the University of Bolton to develop their modelling capacity without a significant software outlay.” [15] Stephen Powell from the Co-educate project (JISC Curriculum Design Programme).

Commercial

“I’m new to EA world, but Archi 1.1 makes me fill like at home! So easy to use and so exciting…”

“Version 1.3 looks great! We are rolling Archi out to all our architects next week. The ones who have tried it so far all love it.”

Find Out More

If this interview has whetted your appetite, more information about Archi and the newly released version 2.0 is available at http://archi.cetis.org.uk. For those in the north, there will be an opportunity to see Archi demonstrated at the forthcoming 2nd ArchiMate Modelling Bash being held in St Andrews on the 1st and 2nd November.

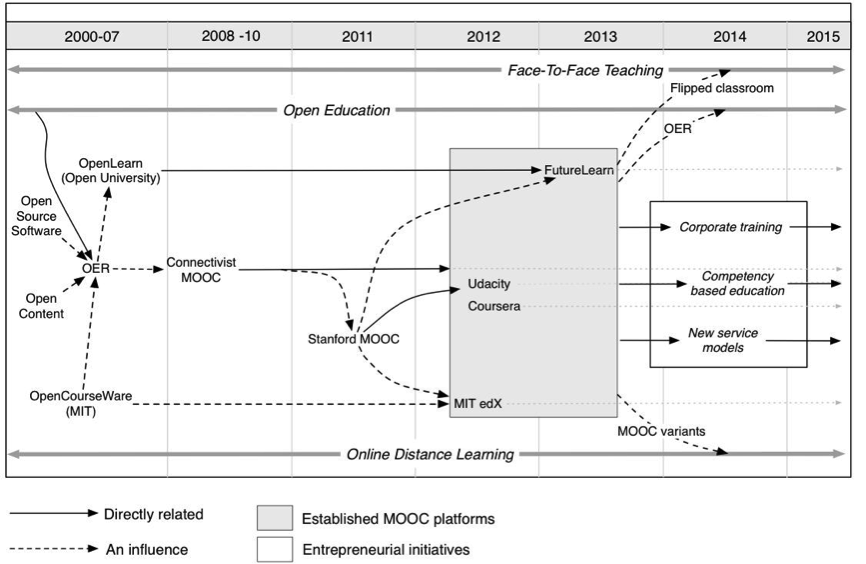

This revised version of the evolution of MOOCs was developed for our paper ‘Partnership Model for Entrepreneurial Innovation in Open Online’ now published in eLearning Papers.

Three years after the initial MOOC hype, and in line with our previous analysis, we looked at some possible trends and the influence of MOOCs on the HE system in the contexts of face-to-face teaching, open education, online distance learning, and possible business initiatives in education and training. We expanded the diagram from 2012-2015 and explored some key ideas and trends around the following aspects:
