How do you write learning outcomes? Do you really ensure that they are meaningful to you, to your students, to your academic board? Do you sometimes cut and paste from other courses? Are they just something that has to be done, a bit opaque but good enough to do the job?

I suspect for most people involved in the development and teaching of courses, it’s a combination of all of the above. So, how can you ensure your learning outcomes are really engaging with all your key stakeholders?

Creating meaningful discussions around developing learning outcomes with employers was the starting point for the CogenT project (funded through the JISC Lifelong Learning and Workforce Development Programme). Last week I attended a workshop where the project demonstrated the online toolkit they have developed. The toolkit was initially designed to help foster meaningful and creative dialogue during co-curricular course developments with employers, but as it has developed and others have started to use it, a range of other uses and possibilities has emerged.

As well as fostering creative dialogue and common understanding, the team wanted a way to evidence those discussions for QA purposes, showing explicit mappings between the expert employer language, the academic/pedagogic language and the eventual learning outcomes used in formal course documentation.

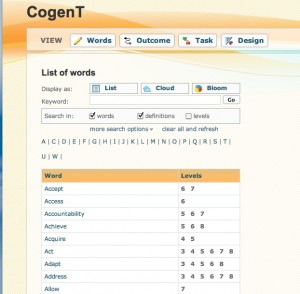

Early versions of the toolkit started by including a number of relevant (and available) frameworks and vocabularies for level descriptors, from which the team extracted and contextualised key verbs into a list view.

List view of CogenT toolkit

(Ongoing development hopes to include the import of competency frameworks and the use of XCRI-CAP.)

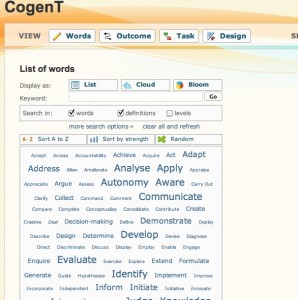

Early feedback found that the list view was a bit off-putting, so the developers created a cloud view.

Cloud view of CogenT toolkit

and a Blooms view (based on Bloom's Taxonomy).

Blooms View of CogenT toolkit

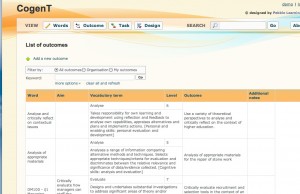

By choosing verbs, the user is directed to a set of recognised learning outcomes and can start to build and customise these for their own specific purpose.

CogenT learning outcomes
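To make the verb-to-outcome step a little more concrete, here is a minimal sketch of how a verb (and optionally a level) might be used to look up template outcomes that are then customised. This is not the toolkit's actual code or data model: the `OutcomeTemplate` class, the `outcomes_for` and `customise` functions, and the sample outcome statements are all hypothetical placeholders made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeTemplate:
    """One hypothetical template outcome, keyed by verb and level."""
    verb: str
    level: int
    text: str


# Made-up sample data; a real toolkit would draw these from recognised frameworks.
TEMPLATES = [
    OutcomeTemplate("evaluate", 3, "Evaluate a given solution against stated criteria."),
    OutcomeTemplate("evaluate", 4, "Evaluate alternative approaches and justify a choice."),
    OutcomeTemplate("describe", 3, "Describe the main features of a familiar process."),
]


def outcomes_for(verb, level=None):
    """Return the templates matching a chosen verb, optionally filtered by level."""
    verb = verb.strip().lower()
    return [
        t for t in TEMPLATES
        if t.verb == verb and (level is None or t.level == level)
    ]


def customise(template, context):
    """Adapt a template outcome to a specific course context."""
    return f"{template.text.rstrip('.')} in the context of {context}."


if __name__ == "__main__":
    for template in outcomes_for("evaluate"):
        print(template.level, customise(template, "workplace safety"))
```

Keeping the level on each template is what would let a learner compare, say, "evaluate" at two different levels side by side.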

As the tool uses standard frameworks, early user feedback started to highlight other potential uses for it, such as: APEL; HEAR reporting; working with adult returners to education to help identify experience and skills; writing new learning outcomes; and, as an almost natural progression, creating learning designs. Another really interesting use of the toolkit has been with learners. A case study at the University of Bedfordshire has shown that students found the toolkit very useful in helping them understand the differences between, and expectations of, learning outcomes at different levels. For example, to paraphrase student feedback after using the tool: “I didn’t realise that evaluation at level 4 was different than evaluation at level 3”.

Unsurprisingly, it was the learning design aspect that piqued my interest, and as the workshop progressed and we saw more examples of the toolkit in use, I could see it becoming another part of the curriculum design tools and workflow jigsaw.

A number of the Design projects, e.g. PALET and SRC, now have revised curriculum documents which clearly define the type of information that needs to be entered. The design workshops the Viewpoints project is running are proving to be very successful in getting people started on the course (re)design process (and, like Co-genT, use key verbs as discussion prompts).

So, for example, I can see potential for course design teams, after taking part in a Viewpoints workshop, then using the Co-genT tool to progress those outputs to specific learning outcomes (validated by the frameworks in the toolkit and/or ones they wanted to add) and then completing institutional documentation. I could also see the toolkit being used in conjunction with a pedagogic planning tool such as Phoebe and the LDSE.

The Design projects could also play a useful role in helping to populate the toolkit with any competency or other recognised frameworks they are using. There could also be potential for using the toolkit as part of the development of XCRI to include more teaching and learning related information, by helping to identify common education fields through surfacing commonly used and recognised level descriptors and competencies and the potential development of identifiers for them.

Although JISC funding is now at an end, the team are continuing to refine and develop the tool and are looking for feedback. You can find out more from the project website. Paul Bailey has also written an excellent summary of the workshop.

Speaking of using ‘vocabularies for level descriptors’ and ‘contextualised key verbs’, are you aware of the MUSKET project from Middlesex? They have developed a set of tools for comparing textual documents and the concepts within them (via XCRI-CAP) and have gone some way down the semantic comparison route.

With a whole bunch of learning outcome statements, it ought to be possible to use this type of tool to come up with plausible and helpful matches to material that a course designer is working with. And with an increasing library of already analysed text, you’d get better and better help.

Although the MUSKET tools were originally designed for course description comparison, it seems there are endless potential applications – including lots in curriculum design, course advertising, APEL and others.

Hi Alan

Yes, I am aware of MUSKET and should have mentioned it in the blog, but thanks for reminding me. Yes, I think we really need to get some people together to see what we can do with all we’ve got.

Sheila