Following that challenge, I’m going to try to summarise my experiences of, and reflections on, the recent LAK12 conference in the five areas that resonated most with me over its four days (including the pre-conference workshop day): research, vendors, assessment, ethics and students.

Research

Learning Analytics is a newly emerging research domain. This was only the second LAK conference, and to an extent its focus was on trying to establish and benchmark the domain. Abelardo has summarised this aspect of the conference far better than I could. Although I went to the conference with an open mind and no set expectations, I was struck by the research focus of the papers and the lack of large(r) scale implementations. Perhaps this is due to the ‘buzzy-ness’ of the term learning analytics just now (more on that in the vendor section of this post). This is not meant in any way as a criticism of the conference or the quality of the papers, both of which were excellent.

On reflection, I think the pre-conference workshops gave more opportunity for discussion than the traditional format of paper presentations with short Q&A sessions which the conference followed. Any domain which hopes to impact on teaching and learning has difficulty bridging the divide between research and practice, and personally I find workshops give more opportunity for the kind of discussion that helps, so perhaps LAK13 could include a mix of presentation formats.

That said, I did see a lot of interesting presentations with real potential. To name just a few: a reintroduction to SNAPP, which Lori Lockyer and Shane Dawson presented at the Learning Analytics meets Learning Design workshop; a number of very interesting presentations from the OU on various aspects of their research and how they are now applying analytics; the Mirror project, an EU-funded work-based learning project which includes a range of digital, physical and emotional analytics; and the GLASS system, presented by Derek Leony of Carlos III, Madrid.

George Siemens presented his vision(s) for the domain in his keynote (this was the first keynote I have seen where the presenter’s ideas were shared openly during the presentation – such a great example of openness in practice). There was also an informative panel session on the differences and potential synergies with the Educational Data Mining community. SoLAR (the Society for Learning Analytics Research) is planning a series of events to continue these discussions and the scoping of the domain, and we at CETIS will be helping with a UK event later this year.

Vendors

There were lots of vendors around. I didn’t get the impression of any kind of hard sell, but every educational tool, be it LMS, VLE or CMS, now has a very large, shiny new analytics badge on it, even if what is being offered is actually the same as before, just with parts re-labelled. I’m not sure how much (if any) of the forward-thinking research that was presented will filter down into large scale tools, but I guess that in itself answers the question of why research in this area is needed: so that we in the education community can be informed, and can ask challenging questions of the vendors and the systems they present. I was impressed with a (new to me) system called Canvas Analytics, which colleagues from the community college sector in Washington State briefly showed me. It seems to allow flexibility and customisation of features and UI, is cloud based and so has a more distributed architecture, has CC licensing built in, and has a crowd-sourced feature request facility.

With so many potential sources of data, it is crucial that systems are flexible and can pull data in from, and push data out to, a variety of end points. This gives users, both at the institutional back end and at the UI end, flexibility over what they use. CETIS have been supporting JISC in exploring notions of flexible provision through a number of programmes, including DVLE.

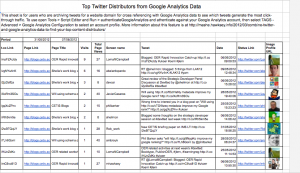

Lori Lockyer made a timely reflection on the development of learning design, drawing parallels with learning analytics. This immediately made me think of the slight misnomer of learning design, which in many cases was actually more about teaching design. There are similar parallels with learning analytics, but what also crossed my mind on more than one occasion was the notion of marketing analytics as a key driver in this space. This was probably more noticeable due to the North American slant of the conference, but I was once again struck by the differences in approaches to marketing to students in North America and the UK. Universities and colleges in the US have huge marketing budgets relative to ours; they need to get students into their classes and keep them there. Systems which manage retention numbers (the more business intelligence end of the analytics spectrum, if you like) could gain traction far more quickly than those exploring effective learning analytics, which is much harder to quantify. Could this lead us into a situation similar to that with VLEs/LMSs, where there was a perceived need to have one (“everyone else has got one”) and vendors sold the sector something which kind of looked like it did the job? Given my earlier comments about flexibility and the pervasiveness of web services, I hope not, but some dark thoughts did cross my mind, and I was drawn back to Gardner Campbell’s presentation questioning some of the narrow definitions of learning analytics.

Assessment

It’s still the bottom line, and the key driver for most educational systems and, in turn, for analytics about those systems. Improving assessment numbers gets senior management attention. The Signals project at Purdue is one of the leading lights in the domain of learning analytics, and John Campbell and the team there have done, and continue to do, an excellent job of gathering data, mainly from their LMS, and feeding it back to students in ways that do have an impact. But again, going back to Gardner Campbell’s presentation, learning analytics as a research domain is not just about assessment. So I was heartened to see lots of references to the potential for analytics to be used in measuring competencies. I think this could benefit students, as it might help to contextualise existing and newly developing competencies, and allow more flexible approaches to the recognition of competencies to be developed. More opportunities to explore the context of learning and not just sell the content? Again, relating back to the role of vendors, I was reminded of how content driven the North American system is. Vendors are increasingly offering competitive alternatives for elective courses with accreditation, as well as OERs (and of course collecting the data). In terms of wider systems, I’m sure that an end-to-end analytics system with content and assessment all bundled in is not far off being offered, if it isn’t already.

Ethics

Data and ethics: collect one and ignore the other at your peril! My first workshop was run by Sharon Slade and Fenella Galpin from the OU, and I have to say I think it was a great start to the whole week (not just because we got to play snakes and ladders), as ethics and our approaches to them underpin all the activity in this area. Most attention just now is focused on issues of privacy, but there are a host of other issues, including:

* power – who gets to decide what is done with the data?

* rights – does everyone have the same rights to use data? Who can mine data for other purposes?

* ownership – do students own their data? What are the consequences of opting out?

* responsibility – is there shared responsibility between institutions and students?

Doug Clow live-blogged the workshop if you want more detailed information, and it is hoped that the session can form the basis for a code of conduct.

Students

Last, but certainly not least, students. The student voice was at times deafening in its silence. At several points during the conference, particularly during the panel session on Building Organisational Capacity by Linda Baer and Dan Norris, I felt a growing concern about things being done “to” and not “with” students. Linda and Dan are conducting some insightful research into organisational capacity building and have already interviewed many (North American) institutions and vendors, but there was very little mention of students. If learning analytics are really going to impact on learning and help transform pedagogical approaches, then shouldn’t we be talking about them with students? What really works for them? Are they aware of what data is being collected about them? Are they willing to let more data from informal sources, e.g. Facebook, Foursquare etc., be used in the context of learning analytics? Are they aware of their data exhaust? As well as these issues, Simon Buckingham Shum made the very pertinent point that even if students were given access to their data, would they actually be able to do anything with it?

And if we are collecting data about students, shouldn’t we also be collecting similar data about teaching staff?

I don’t want to add yet another literacy to the seemingly never-ending list, but this does tie in with the wider context of digital literacy development. Sense-making of data and visualisations is key if learning analytics is to gain traction in practice, and it’s not just students who are falling short; it’s probably all of us. I saw lots of “pretty pictures” in terms of network visualisations, potential dashboard views, etc. over the week, but did I really understand them? Do I have the necessary skills to properly decode and make sense of them? Sometimes, but not all the time. I think visualisations should come with a big question mark symbol attached or overlaid: they should always raise questions. At the moment I don’t think enough people have the skills to be able to question them confidently.

Overall it was a very thought-provoking week, with too much to include in one post, but if you have a chance, take a look at Katy Börner’s keynote, Visual Analytics in Support of Education, which was one of my highlights.

So, thanks to all the organisers for creating such a great atmosphere for sharing and learning. I’m looking forward to LAK13, to seeing what advances are made in the coming year, and to finding out whether a European location will bring a different slant to the conference.