As the JISC-funded Curriculum Design Programme is now entering its final year, the recent Programme meeting focused on effective sharing of outputs. The theme of the day was “Going beyond the obvious, talking about challenge and change”.

In the morning there were a number of breakout sessions on different methods and approaches for telling project stories effectively. I co-facilitated the “Telling the Story – representing technical change” session.

Now, as anyone who has been involved in a project that involved implementing or changing technology systems knows, one of the keys to success is actually not to talk too much about the technology itself, but to highlight the benefits of what it does or will do. Of course there are times when projects need to have in-depth technical conversations, but in terms of the wider project story, the technical details don’t need to be at the forefront. What is vital is that the project can articulate change processes in both technical and human workflow terms.

Each project in the programme undertook an extensive baselining exercise to identify the processes and systems (human and technical) involved in the curriculum design process (the PiP Process workflow model is a good example of the output of this activity).

Most projects agreed that this activity had been really useful in allowing wider conversations around the curriculum design and approval process, as there weren’t any formal spaces for these types of discussions. In the session there was also the feeling that technology was the Trojan horse around which the often trickier human process issues could be discussed. As with all educational technology projects, every project has had issues with language and common understandings.

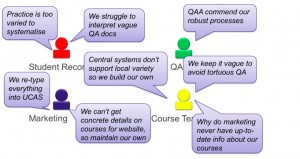

So what are the successful techniques or “stories” around communicating technical changes? Peter Bird and Rachael Forsyth from the SRC project shared their experiences of using an external consultant to run stakeholder engagement workshops around the development of a new academic database. They have also written a comprehensive case study on their experiences. The screenshot captures some of the issues the project had to deal with – and I’m sure that it could represent views in practically any institution.

MMU have now created their new database and have documentation which is being rolled out. You can see a version of it in the Design Studio. There was quite a bit of discussion in the group about how they managed to get a relatively minimal set of fields (5 learning outcomes, 2 assessments) – some of that was down to that well-known BOAFP (back of a fag packet) methodology . . .

Conversely, the PALET team at Cardiff are having to add more fields to their programme and module forms now that they are integrating with SITS and have more feedback from students. Again you can see examples of these in the Design Studio. The T-Sparc project have also undertaken extensive stakeholder engagement (using a number of techniques, including video, which was the subject of another breakout session) and are now starting to work with a dedicated SharePoint developer to build their new webforms. To aid collaboration, the user interface will have discussion tabs, and the system will then create a definitive PDF for a central document store. It will also be able to route the data into other relevant places such as course handbooks, KIS returns etc.

As you can see from the links in the text, we are starting to build up a number of examples of course and module specifications in the Design Studio, and this will only grow as more projects start to share their outputs in this space over the coming year. One thing the group discussed, and which the support team will work with the projects to try and create, is some kind of checklist for creating course documentation, based on the findings of all the projects. There was also a lot of discussion around the practical issues of course information management and general data management, e.g. data creation, storage, workflow, versioning, instances.

As I pointed out in my previous post about the meeting, it was great to see such a lot of sharing going on in the meeting and that these experiences are now being shared via a number of routes including the Design Studio.