Following on from my pre-event ponderings and questions, this post reflects on some of the outcomes from our recent Design Bash in Oxford. A quick summary post based on tweets from the day is also available.

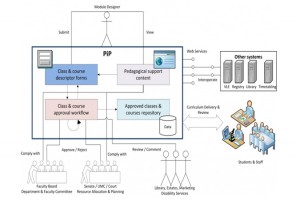

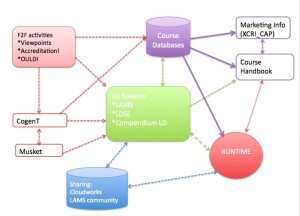

Below is an updated potential workflow(s) diagram, which I created to encourage discussion around workflows for some of the systems represented at the event.

Potential learning design workflows

As I pointed out in my earlier post, this is not a definitive view but a starting point for discussion, and there are obvious and quite deliberate gaps, not least the omission of content sources. As learning design is primarily about the structure, process and sequencing of activities, not just content, I didn’t want to make content explicit and add yet another layer of complexity to an already crowded picture. What I was keen to see was some more investigation of the links between the staff development and face-to-face processes and the various systems. To quote myself:

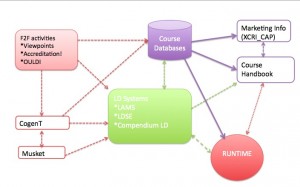

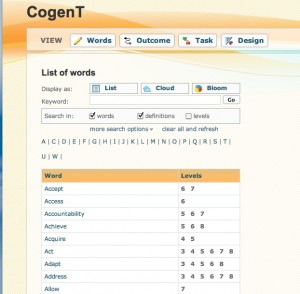

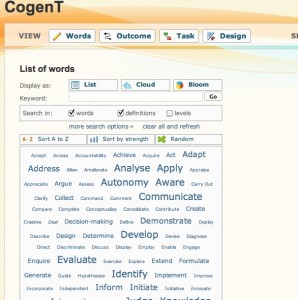

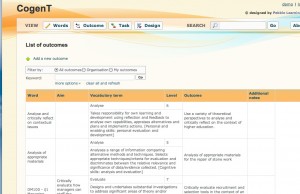

“starting from some initial face to face activities such as the workshops being so successfully developed by the Viewpoints project or the Accreditation! game from the SRC project at MMU, or the various OULDI activities, what would be the next step? Could you then transform the mostly paper-based information into a set of learning outcomes using the Co-genT tool? Could the file produced there then be imported into a learning design tool such as LAMS or LDSE or Compendium LD? And/or could the file be imported to the MUSKET tool and transformed into XCRI CAP – which could then be used for marketing purposes? Can the finished design then be imported into a course database and/or a runtime environment such as a VLE or LAMS?”

Well, we maybe didn’t get to quite as long a chain as that. However, one of the several break-out groups did identify an alternative workflow:

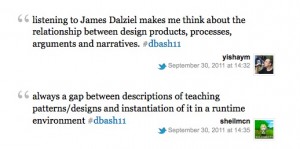

potential workflow tweet

During the lightning presentation session, Alejandro Armellini (University of Leicester) gave an overview of the Carpe Diem learning design process they have developed. Ale outlined how learning design had provided a backbone for their OER work. More information on the process is available in this post.

In the afternoon, James Dalziel demo’d another workflow, where he took a pattern from the LDSE Learning Designer (a “predict, observe, explain” pattern shown in the lightning session by Diana Laurillard), converted it into a LAMS sequence, shared it in the LAMS community and embedded it into Cloudworks. A full overview of how James went about this, with reflections on the process and a PowerPoint walkthrough, is available on Cloudworks. The recent sharing and embedding features of LAMS are another key development in re-use.

Although technical interoperability is a key driver for integrating systems, with learning design, pedagogical interoperability is just as important. Sharing (and shareable) designs is akin to the holy grail for learning design research, but there is always an element of human translation needed.

thoughts on design process

However, James’ demo did show how much closer we are now to being able to share design patterns effectively and easily. You can see another example of an embedded LAMS sequence here.

The day generated a lot of discussion and hopefully stimulated some new workflows for participants to work on. In terms of issues coming out of the discussions, below is a list of some of the common themes which emerged from the feedback session:

* how to effectively combine face-to-face activities with more formal institutional processes
* useful to see connections between module and course level designs being articulated more
* emerging interoperability of systems
* looking at potential integrations has raised even more questions
* links to OER
* capturing commonalities and mapping of vocabularies and tools; the role of semantic technologies and linked data approaches
* surfacing elements of course, module and activity design, and the potential impact on learners as well as teachers
* what are “good enough” descriptions/representations of designs to allow real teachers to use them

So, plenty of food for thought. Over the coming months I’ll be working on a mapping of the processes, tools, guides etc. we know of in this space. I’ll initially focus on JISC-funded work, so if you know of other learning design tools, or have a shareable workflow, then please let me know.