Late last month the Larnaca Declaration on Learning Design was published. Being “that time of year”, I didn’t get round to blogging about it at the time. However, as it’s the new year and the OLDS MOOC is starting this week, I thought it would be timely to have a quick review of the declaration.

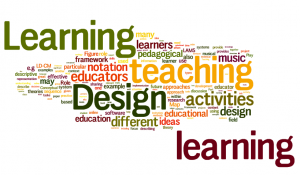

The wordle gives a flavour of the emphasis of the text.

Wordle of Larnaca Declaration on Learning Design

First off, it’s actually more of a descriptive paper on the development of research into learning design than a set of statements declaring intent or a call for action. As such, it is quite a substantial document. Setting the context and sharing the outcomes of over 10 years’ worth of research is very useful, and for anyone interested in this area I would say it is definitely worth taking the time to read it. Even for an “old hand” like me it was useful to recap on some of the background and core concepts. It states:

“This paper describes how ongoing work to develop a descriptive language for teaching and learning activities (often including the use of technology) is changing the way educators think about planning and facilitating educational activities. The ultimate goal of Learning Design is to convey great teaching ideas among educators in order to improve student learning.”

One of my main areas of involvement with learning design has been around interoperability and the sharing of designs. Although the IMS Learning Design specification offered great promise of technical interoperability, there were a number of barriers to implementing the full potential of the specification. Indeed, expectations of what the spec actually did were somewhat over-inflated, something I reflected on way back in 2009. However, sharing of design practice and of designs themselves has developed, and this is something we at CETIS have tried to promote and move forward through our work in the JISC Design for Learning Programme (in particular with our mapping of designs report), the JISC Curriculum Design and Delivery Programmes, and our Design Bashes: 2009, 2010, 2011. I was very pleased to see the Design Bashes included in the timeline of developments in the paper.

James Dalziel and the LAMS team have continually shown how designs can be easily built, run, shared and adapted. However, having one language or notation system is still a goal in the field. During the past few years, though, much of the work has concentrated on understanding the design process and on helping teachers find effective tools (online and offline) to develop new(er) approaches to teaching practice and share those with the wider community. Viewpoints, LDSE and the OULDI projects are all good examples of this work.

The declaration uses the analogy of the development of musical notation to explain the need for, and aspirations of, a design language which can be used to share and reproduce ideas, or in this case lessons. Whilst still a conceptual idea, this may be one of the closest analogies with universal understanding. Developing such a notation system is still a challenge, as the paper highlights.

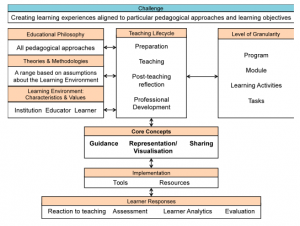

The declaration also introduces a Learning Design Conceptual Map which tries to “capture the broader education landscape and how it relates to the core concepts of Learning Design”.

Learning Design Conceptual Map

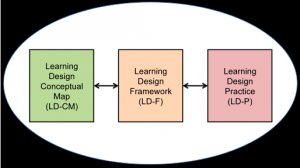

These concepts include pedagogic neutrality, pedagogic approaches/theories and methodologies, teaching lifecycle, granularity of designs, guidance and sharing. The paper puts forward these core concepts as providing the foundations of a framework for learning design which, combined with the conceptual map and actual practice, provides a “new synthesis for the field of learning design” and future developments.

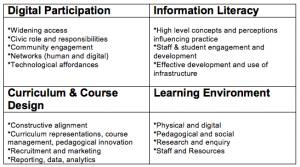

Components of the field of Learning Design

So what next? The link between learning analytics and learning design was highlighted at the recent UK SoLAR Flare meeting. Will having more data about interactions and networks help to develop design processes and ultimately improve the learning experience for students? What about the link with OERs? Content always needs context, and using OERs effectively intrinsically means having effective learning designs, so maybe now is a good time for the OER community to engage more with the learning design community.

The Declaration is a very useful summary of where the Learning Design community is to date, but what is always needed is more time for practising teachers to engage with these ideas, so that they can start engaging with the research community and with the tools and methodologies which that community has been developing. The Declaration alone cannot do this, but it might act as a stimulus for existing and future developments. I’d also be up for running another Design Bash if there is enough interest – let me know in the comments if you are interested.

The OLDS MOOC is another great opportunity for future development too, and I’m looking forward to engaging with it over the next few weeks.

Some other useful resources

* Learning Design Network Facebook page
* PDF version of the Declaration
* CETIS resources on curriculum and learning design
* JISC Design Studio