A good thing about working at JISC CETIS is being surrounded by the wide array of interests and ideas of its staff. A bad thing about working at JISC CETIS is that, with its distributed nature (and the fact everybody is always so busy!), it is not always possible to sit down and have a good natter about these interests.

Sheila recently blogged about social analytics and the way people share things. I enjoyed the post, as I find resource sharing online a really interesting area. I increasingly find myself getting anxious about how I share things online and about which online persona my ideas and resources are attached to. I find myself carving out an online identity made up of different levels of obscurity, pushing my outputs up the levels as and when I feel more comfortable with them. I find it interesting that Christopher Poole’s latest social network allows you to work anonymously and then gives you the option to claim the work at a later date.

I left a comment on Sheila’s post and she replied, first with a comment on the post and then with a quick Skype chat. It occurred to me then that an online social structure that has worked very well for me is the JISC CETIS blogs. An environment of regular blogging and commenting allows ideas to be shared and to grow across the distributed organisation.

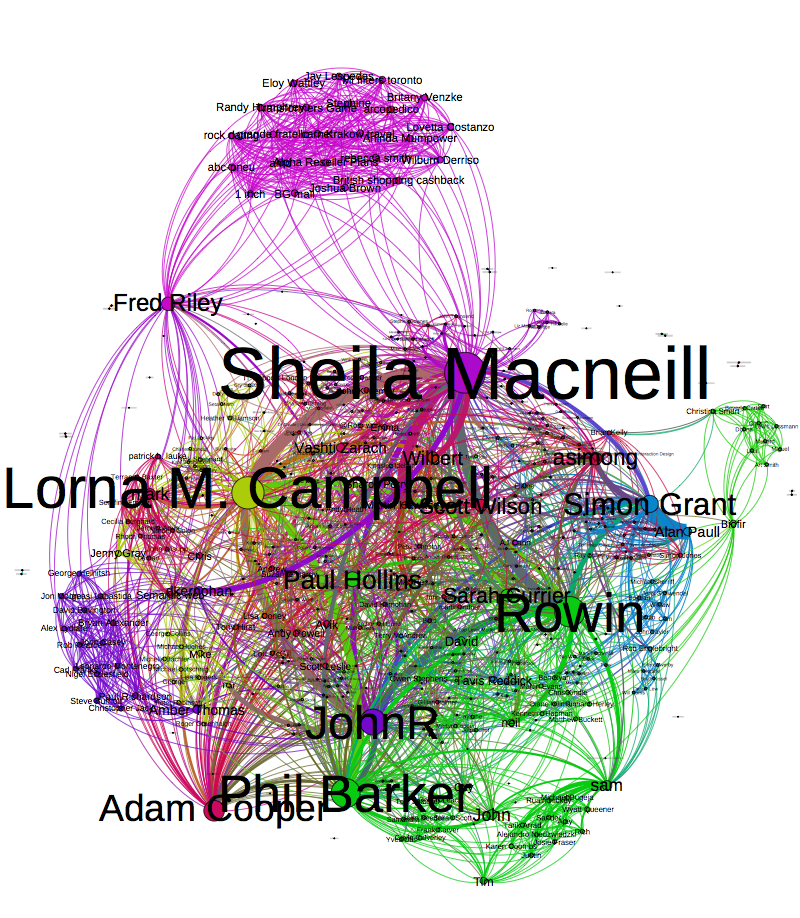

Over the past week or so I’ve been collecting data for a report and struggling to find a way to analyse it. I came up with a method of turning the networks I can spot in my CSV files into something network analysis tools can understand (which you can read about further down the chain of obscurity). Now that I’m obsessed with running data through the technique, I thought I’d run the CETIS blog authors and the conversations that join them through the method and steal Tony’s visualisation technique. I’ve removed pingbacks and such. It might not be useful but it tickles the occipital lobe.
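
For anyone curious about what turning a CSV into a network can look like, here is a minimal sketch of the general idea. It is not the exact method I used for the report. It assumes a CSV with one row per comment and two hypothetical columns, `commenter` and `post_author`, and it collapses the rows into a weighted edge list with `Source`, `Target` and `Weight` columns, a layout that tools such as Gephi can typically import. The names in the sample are made up.

```python
import csv
import io
from collections import Counter


def comments_to_edges(csv_text, source_col="commenter", target_col="post_author"):
    """Count how often each commenter replied to each post author.

    The column names are assumptions for this sketch; change them to
    match whatever headers the real CSV uses.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    weights = Counter()
    for row in reader:
        source = (row.get(source_col) or "").strip()
        target = (row.get(target_col) or "").strip()
        # Skip incomplete rows and people commenting on their own posts.
        if source and target and source != target:
            weights[(source, target)] += 1
    return weights


def edges_to_csv(weights):
    """Write the weighted edges as a Source,Target,Weight edge list."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["Source", "Target", "Weight"])
    for (source, target), weight in sorted(weights.items()):
        writer.writerow([source, target, weight])
    return out.getvalue()


# Made-up sample data: one row per comment.
sample = """commenter,post_author
Alice,Bob
Alice,Bob
Bob,Alice
Carol,Carol
"""

edges = comments_to_edges(sample)
print(edges_to_csv(edges))
```

Each repeated commenter and author pair becomes a heavier edge, which is what lets a visualisation draw thicker lines between people who talk to each other more often.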