Since going to the annual European Eifel “ePortfolio” conferences is a firm habit of mine (in fact, I have been to every single one so far), it seems like a good time to take stock of the e-portfolio world. All credit to Serge and Maureen and their team: they have kept the event the best “finger on the pulse” in this field. This year, as last, it was in Maastricht. It ran for just 3 rather than 4 days, and there were apparently some hundred fewer people overall. Nevertheless, others as well as I felt that there was an even better overall feel this year. At the excellent social dinner boat trip, I was reflecting: where else can one move so quickly from discussing deeply human issues like personal development, with people who care very insightfully about people, to talking technically about the relative merits of the languages and representations used for implementation of tools and systems, with people who are highly technically competent? It makes sense for this account to take both of those tracks.

Taking the easy one first, then… We didn’t have a “plugfest” this year, which was in some ways odd: the last three years (since Cambridge, 2005) we have had some attempt at interoperability trials, even though no one was really ready for them. (People did remarkably well, considering.) But this year, when in the PIOP work with LEAP2A we really have started something that is going to work, there were no trials, just presentations. Actually I think that it is much better for being less “hyped”. By next year we should have something really solid to present and demonstrate. I presented our work at two sessions, and in both it was well received.

Not everyone likes XML schema specifications – Sampo Kellomäki enlightened me about some of the gross failings around XML – but luckily, those who aren’t so keen on XML or Atom seemed to appreciate the other side of LEAP 2.0 – the side of RDF and the Semantic Web connections, and the RDFa ideas I first understood in my work for ioNW2. It was good to have something for everyone with a technical interest.

What was disappointing was to understand more closely just what has been happening in the Netherlands. Someone must have made the decision a couple of years ago to follow “the international standard” of IMS ePortfolio, not taking account of the fact that it had not been properly tested in use. That’s how the IMS used to work (though no longer): get a spec out there quickly, get someone to implement it, and then improve towards something workable based on feedback. But though there were “implementations” of IMS eP, there was no real test of interoperability or portability. Various people we know and work with had tried it, even up to last year’s conference, so we knew many of the problems. Anyway, in the Netherlands, they have been struggling to adapt and profile that difficult spec, and despite the large amount of public funding put into the project (too much?), most of the couple of dozen national partners have implemented only a subset even of their own limited profile. And IMS eP is not being used as an internal representation by anyone.

Fortunately, Synergetics, who have been involved in the Dutch work (despite being Belgian) have also joined our forthcoming round of PIOP work, and talk towards the end of the conference was that LEAP2A will be added to the Dutch interoperability framework. I do hope this goes through – we will support it as much as we are able. Synergetics also play a leading role in the impressive TAS3 project, so we can expect that as time goes on pathways will emerge to add security architecture to our interoperability approach. But now on to the much more humanly interesting discussions.

I had the good luck to bump into Darren Cambridge (as usual, a keynote speaker) on the evening before the conference, and we talked over some of the ideas I’ve been developing, which at the moment I label as “personal integrity reflection across contexts”. Now that needs writing about separately, but in essence it involves a way of thinking about how to promote real growth, development and change in people’s lives. We also talked about this with Samantha Slade of Percolab – Darren analysed Samantha’s e-portfolio for his forthcoming book (which will be more erudite and better written than mine!).

These discussions were the peak, but elsewhere throughout the conference I got the feeling that the time is now perhaps right to move forward more publicly with discussing values in relation to e-portfolios. Parts of my vision were expressed in Anna’s and my paper two years ago at the Oxford conference – “Ethical portfolios: supporting identities and values.” In essence, it goes like this: portfolio practice can help to develop people’s values, and their understanding of their own values; with that understanding, they can choose occupations which lead to satisfaction and fulfillment; representing those values in machine-readable form may lead to much more potent matching within the labour market – another tool towards “flexicurity” (a term introduced to me 10 minutes ago by Theo Mensen). The new insight is that the development of personal values, and the understanding of them, is supported by some kinds of reflection and not by others. The term I am trying out to point towards the most useful and powerful kind of reflection is “personal integrity reflection across contexts”. I hope the ideas can be taken forward and presented in more depth next year.

At the conference there was also a focus on “Learning Regions” (the subject of Theo’s call), which I wasn’t able to attend much of. My view of regional initiatives has been somewhat jaded by peripheral involvement years ago with regional development agencies that seemed to have just one agenda item: inward investment. But the vision at the conference was much broader and more humane. My input is quite limited. Firstly, to get anything distinctive going for a region, there needs to be a common language for the distinctive concerns (and groups of concerns) of that region. If this is done machine-readably (e.g. in RDF) then there is the hope of cross-linkage, not just in the labour market but beyond. Again, as in my ioNW2 work, this could well be based on clear and unambiguous URIs being set up for each concept, and possibly this could be extended to having some kind of ontology in the background. Then there is the question of two-way matching, already trialled in a small way by the Dutch public employment service (CWI).
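To make the idea of URI-identified concepts and two-way matching a little more concrete, here is a minimal sketch in Python. Everything in it is my own illustrative assumption: the `http://example.org/...` namespace, the concept names, the `Profile` structure and the scoring rule are hypothetical, and nothing here describes how CWI or any existing tool actually does its matching.

```python
from dataclasses import dataclass

# Hypothetical namespace: a region would publish its own stable URIs.
BASE = "http://example.org/region/concept/"


def concept(name: str) -> str:
    """Build an unambiguous URI for a named concept (illustrative only)."""
    return BASE + name


@dataclass(frozen=True)
class Profile:
    """A person or an employer, described by what they offer and what they seek."""
    name: str
    offers: frozenset
    seeks: frozenset


def one_way_score(seeker: Profile, provider: Profile) -> float:
    """Fraction of the seeker's wanted concepts that the provider offers."""
    if not seeker.seeks:
        return 1.0  # nothing sought, so nothing is left unmet
    return len(seeker.seeks & provider.offers) / len(seeker.seeks)


def two_way_score(a: Profile, b: Profile) -> float:
    """A match is only as good as its weaker direction."""
    return min(one_way_score(a, b), one_way_score(b, a))


person = Profile(
    name="candidate",
    offers=frozenset({concept("welding"), concept("teamwork")}),
    seeks=frozenset({concept("sustainability"), concept("flexible-hours")}),
)
employer = Profile(
    name="employer",
    offers=frozenset({concept("sustainability")}),
    seeks=frozenset({concept("welding"), concept("teamwork")}),
)

print(two_way_score(person, employer))
```

Because both sides refer to the same URIs, the comparison is a plain set intersection. The employer's needs are fully met here, but only half of what the candidate seeks is on offer, so the two-way score is held down by the weaker direction. An ontology in the background could later let related concepts count as partial matches.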

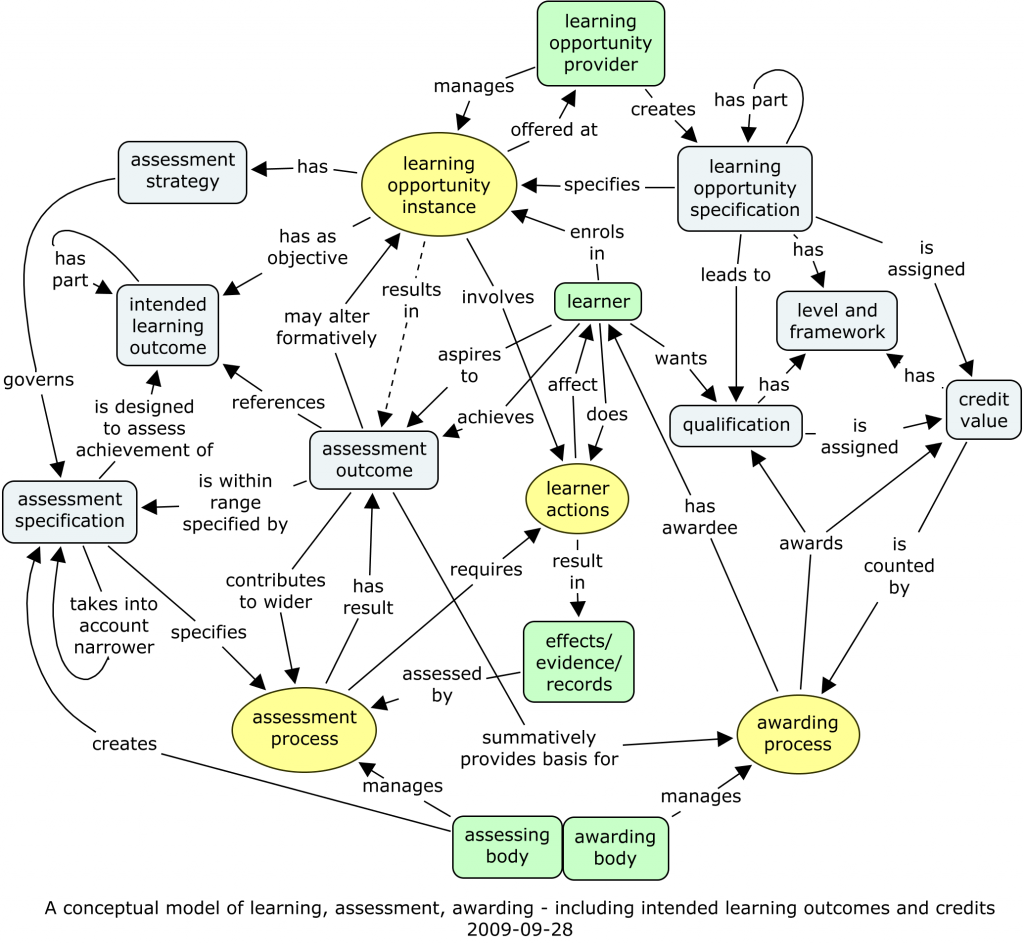

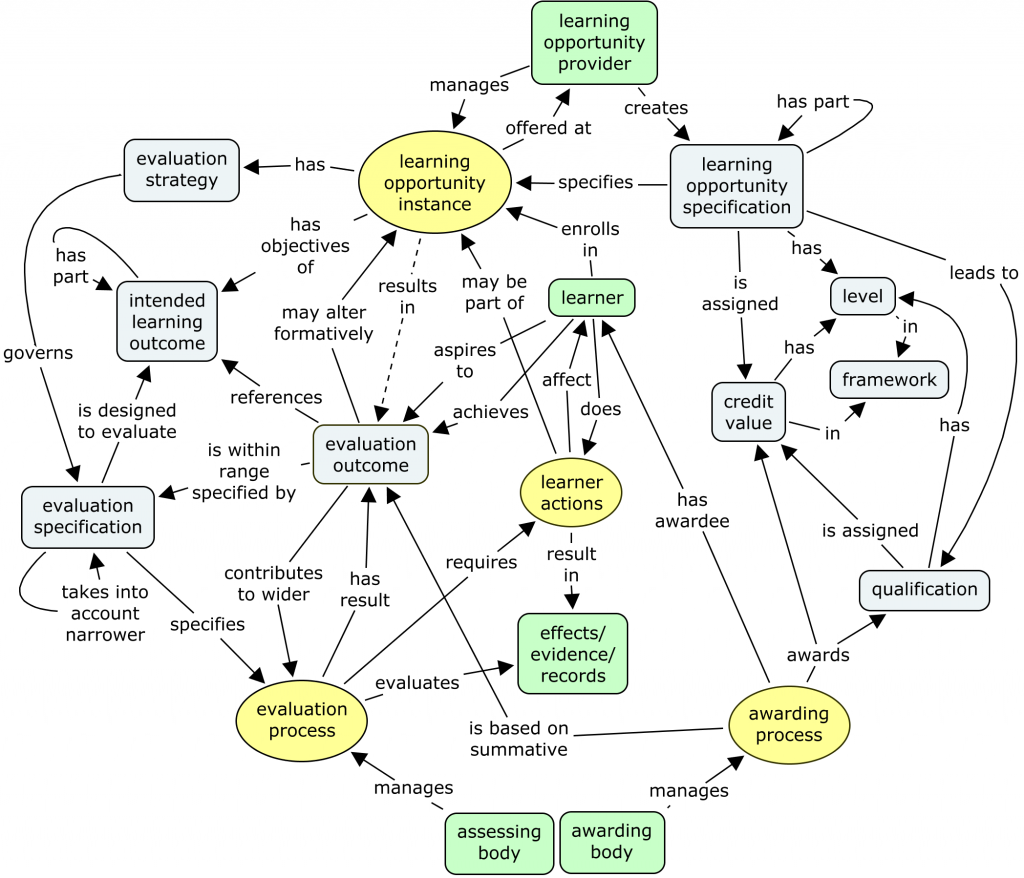

This leads to an opportunity for me to round up. There is so much to which e-portfolio practice and tools could contribute, and the sense of this conference was that things are indeed set to move forward. But it still depends on matters which are not fully and generally understood. There is the issue of representing skills/competences/abilities, which will not go away until dealt with satisfactorily (beyond TENCompetence), and alongside that, the issue of assessing those in a way which makes sense to employers (and whose results can be machine processed). That “hard” assessment needs to be reconciled with the more humane e-portfolio based assessment, which I think everyone agrees is already very good for getting a feel for those last few short-listable candidates. Portfolio tools still have a way to go until they are relevant for search and automatic matching.

But my opinion is that progress here, and elsewhere, can definitely be made.