This is the first of a series of posts summarizing the technical aspects of the JISC Curriculum Design Programme, based on a series of discussions between CETIS and the projects. These yearly discussions have been annotated and recorded in our PROD database.

The programme is well into its final year, with projects due to finish at the end of July 2012. Instead of a final report, the projects are being asked to submit a more narrative institutional story of their experiences. As with any long running programme (four years in this instance), a lot has changed since the projects started, both within the institutions themselves and in the wider political context in which the UK HE sector now finds itself.

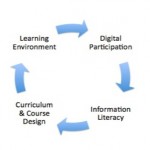

At the beginning of the programme, the projects were put into clusters based on three high level concepts they (and indeed the programme) were trying to address:

• Business processes – Cluster A

• Organisational change – Cluster B

• Educational principles/curriculum design practices – Cluster C

I felt that it would be useful to summarize my final thoughts on, or my view of, the overall technical journey of the programme (this may be a mini epic!). This post will focus on the Cluster C projects: OULDI (OU), PiP (University of Strathclyde) and Viewpoints (University of Ulster). These projects all started with explicit drivers based on educational principles and curriculum design practices.

OULDI (Open University Learning Design Initiative)

*Project Prod Entry

The OULDI project has been working to “... develop and implement a methodology for learning design composed of tools, practice and other innovation that both builds upon, and contributes to, existing academic and practitioner research.”

The team have built up an extensive toolkit around the design process for practitioners, including: Course Map template, Pedagogical Features Card Sort, Pedagogy Profiler and Information Literacies Facilitation Cards.

The main technical developments for the project have been the creation of the Cloudworks site and the continued development of the Compendium LD learning design tool.

Cloudworks, and its open source version CloudEngine, is one of the major technical outputs of the programme. Originally envisioned as a kind of flickr for learning designs, the site has evolved into something slightly different: “a place to share, find and discuss learning and teaching ideas and experiences.” In fact, this evolution into a more discursive space has perhaps made it a far more flexible and richer resource. Over the course of the programme we have seen it develop from the desire to preview learning designs to, last year, LAMS sequences being fully embedded in the site, along with other embedded resources such as video diaries from the team’s partners.

The site was originally built in Drupal; however, the team made a decision to switch to CodeIgniter. This has given them the flexibility and level of control they felt they needed. Juliette Culver has written an excellent blog post about their decision process and experiences.

Making the code open source has also been quite a learning curve for the team, which they have been documenting, and they plan to produce at least one more post aimed at developers around some of the practical lessons they have learned. Use of Cloudworks has been growing; however, the open source version hasn’t been taken up as widely. I speculated with the team that perhaps it was simply because the original site is so user-friendly that people don’t really see the need to host their own version. However, I think that having the code available as open source can only be a “good thing”, particularly for a JISC funded project. Perhaps some more work on showing examples of what can be done with the API (e.g. building on the experiments CETIS did for our 2010 Design Bash) might be a way to encourage more experimentation and integration of parts of the site in other areas, which in turn might lead to the bigger step of implementing a stand alone version. That said, sustaining the evolution of Cloudworks is a key issue for the team. In terms of internal institutional sustainability, there is now commitment to it, and it has been highlighted in various strategy papers, particularly around enhancing staff capability.

Compendium LD has also developed over the programme life-cycle. PC, Mac and Linux versions are now available to download. There is also additional help built into the tool linking to Cloudworks, and a prototype area for sharing design maps. The source code is also available under a GNU licence. The team have created a set of useful resources, including a video introduction and a set of user guides. It’s probably fair to say that Compendium LD is really for “expert designers”; however, the team have found the icon set used in the tool really useful in f2f activities around developing design literacies, and are using the icons as part of a separate paper-based output.

Viewpoints

*Project Prod Entry

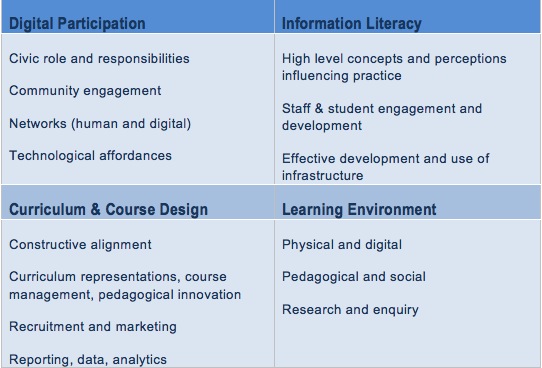

The project has focused on the development and facilitation of its set of curriculum re-design workshops. “We aim to create a series of user-friendly reflective tools for staff, promoting and enhancing good curriculum design.”

The Viewpoints process is now formally embedded in the institutional course re-validation process. The team are embarking on a round of ‘train the trainer’ workshops to create a network of Viewpoints Champions to cascade throughout the University. A set of workshop resource packs is being developed, which will be available to the champions through the library via a booking system (for monitoring purposes). The team have also shared a number of outputs openly through a variety of channels including delicious, flickr and slideshare.

The project has focused on f2f interactions, and the team are now creating video case studies from participants, which will be available online over the coming months. The team had originally planned to build an online narration tool to complement (or perhaps even replace) the f2f workshops. However, they now feel that the richness of the workshops could not be replaced with an online version. But as luck would have it, the Co-Educate project is developing a widget based on the 8-LEM model, which underpins much of the original work from which Viewpoints evolved, and so the project is discussing ways to input into and utilize this development, which should be available by June.

Early in the project, the team explored some formal modelling approaches, but found a lighter weight approach using Balsamiq particularly useful for their needs. It proved to be effective both for rapid prototyping and reducing development time, and for getting useful engagement from end users. Balsamiq, and the rapid prototyping approach developed through Viewpoints, is now being used widely by the developers in other projects for the institution.

Due to the focus on developing the workshop methodology there hasn’t been as much technical integration as originally envisaged. However, the team has been cognisant of institutional processes and workflows. Throughout the project the team have been keen to enable and build on structured data driven approaches allowing data to be easily re-purposed.

The team are now involved in the restructuring of a default course template area for all courses in their VLE. The template will pull in information from a variety of sources, including the library, NSS and assignment dates, as well as a number of the frameworks and principles (e.g. assessment) developed through the project. So there is a logical progression from the f2f workshop, to course validation documentation, to what the student is presented with. Although the project hasn’t formally used XCRI, they are noting growing institutional interest in it and in data collection in general.

The team would like to continue with a data driven approach and see the development of their timetabling provision to make it more personalised for students.

PiP (Principles in Patterns)

*Project Prod Entry

The aims of the PiP project are:

“(i) develop and test a prototype on-line expert system and linked set of educational resources that, if adopted, would:

· improve the efficiency of course and class approval processes at the University of Strathclyde

· help stimulate reflection about the educational design of classes and courses and about the student experiences they would promote

· support the alignment of course and class provision with institutional policies and strategies

(ii) use the findings from (i) to share lessons learned and to produce a set of recommendations to the University of Strathclyde and to the HE sector about ways of improving class and course approval processes”

Unlike OULDI and Viewpoints, this project was less about f2f engagement supporting staff development in terms of course design, and focused instead on designing and building a system based on educationally proven methodology (e.g. the REAP project). In terms of technical outputs, the outputs and experiences of the team in some ways mirrored those of the projects in Cluster B more closely: PiP, like T-SPARC, has developed a system based on SharePoint and, like PALET, has used Six Sigma and Lean methodologies.

The team have experimented extensively with a variety of modelling approaches, from UML and BPMN via a quick detour exploring Archi, for their base-lining models to now adopting Visio and the Six Sigma methodology. The real value of modelling is nearly always the conversations the process stimulates, and the team have noticed a perceptible change within the institution around attitudes towards, and the recognition of the importance of understanding and sharing core business processes. The project process workflow diagram is one I know I have found very useful to represent the complexity of course design and approval systems.

The team now have a prototype system, C-CAP, built on SharePoint, which is being trialled at the moment. The team are currently reflecting on the feedback so far via the project blog. This recent post outlines some of the divergent information needs within the course design and approval process. I’m sure many institutions could draw parallels with these thoughts, and I’m sure the team would welcome feedback.

In terms of the development of the expert system, the team has had to deal with a number of challenges arising from the lack of institutional integration between systems. SharePoint was a common denominator, and so an obvious place to start. However, over the course of the past few years, there has been a re-think about development strategies. Originally it was planned to build the system using a .NET framework approach. Over the past year the decision was made to change to an InfoPath approach. In terms of sustainability the team see this as being far more effective, and hope to see a growing number of power users as opposed to the specialist developers that the .NET approach would have required. The team will be producing a blog post sharing the developer’s experience of building the system through the InfoPath approach.

Although the team feel they have made inroads around many issues, they do still see issues institutionally particularly around data collection. There is still ambiguity about use of terms such as course, module, programme between faculties. Although there is more interest in data collection in 2012 than in 2008 from senior management, there is still some work to be done around the importance and need for consistency of use.

So from this cluster we have a robust set of tools for engaging practitioners, with resources to help kick start the (re)design process, and a working prototype for moving from the paper based resources into formal course approval documentation.
